The disruption delay

How long it takes until disruptive changes are picked up by the mainstream

This blog post is about an observation I made while pondering the slow adoption rates of several technologies in IT that I consider to be game changers. My claim is:

It takes about 20 years until a disruptive IT technology arrives at mainstream.

By “disruptive” I mean that the technology significantly changes the way we do or use IT.

E.g., Open Source Software (OSS) changed the way we do IT, i.e., we create, build and sometimes even run software differently. Yet, OSS did not much change how IT is used, i.e., which kinds of solutions we build with it. The World Wide Web (WWW), on the other hand, massively changed the way IT is used. E.g., e-commerce would be unthinkable without the WWW. It also changed the way we do IT a bit, but its biggest impact was on the way IT is used.

Some observations

But what observations led me to my claim, and why 20 years? Are 20 years not a bit too long?

Let us start with the observations I made and then augment them with some other disruptive changes we have seen before in IT. Then things should become clearer.

The first observation that made me wonder was how most companies handle smartphones. Smartphones revolutionized customer interaction. In simple words: “The customer does not go to the Internet anymore. The Internet goes with the customer.”

From a product manager’s perspective, this means that the options to interact with your customers and to provide them with the best support possible in every single life situation just exploded. In 2016, mobile Internet traffic surpassed stationary Internet traffic (notebooks considered “stationary”) and the percentage of mobile Internet traffic is still growing.

And what was the typical response to this revolution? After several years of silently ignoring it, around 2015 companies started to consider designing their web UIs “mobile first” – which in practice usually meant making their websites responsive.

The possibilities to address customers differently in different situations? Usually left unused. Usually the same use cases, the same flows, the same input and output data – sometimes with a slightly crippled mobile version, i.e., some fields or functions removed because many desktop web pages do not work too well on the smaller screens of mobile devices with virtual keyboards.

We have not even started to take into account the promise of contextual computing, which is itself several years old by now: mobile devices automatically changing their capabilities based on their environment – e.g., offering other capabilities at home than in the car, at work or in a shopping mall.

Now remember that smartphones began their triumphant advance in 2007. We live in year 14 of smartphones and still most companies do not really understand what they mean and thus do not leverage the options smartphones give them.

Another observation I made concerns the adoption of public cloud services. Luckily, here in Germany, where I live, we have left behind the times when public cloud adoption was rejected in a knee-jerk way because “it is insecure” or “the USA will steal our data”, or for some other ostensible reason to avoid dealing with cloud technology.

Yet, public cloud adoption is still in its infancy in most places. We see a lot of “private clouds” which in the end offer less than traditional virtualization technologies. And if public clouds are used, usually all differentiating features, especially the managed services, are carefully removed to “avoid vendor lock-in”, another bogus argument (see the footnote for more details). 1

Most cloud adoptions are still limited to compute and storage virtualization – only provisioned by the ops department via request, thus not even self-service, let alone elastic scalability. This deprives you of most contemporary architecture and technology options and leaves you with a huge competitive and financial disadvantage compared with those companies that know how to leverage cloud technologies (again: I discussed this in more detail in “The cloud-ready fallacy”).

We are in the year 15 of public cloud. In 2006, AWS started their first cloud services like EC2 and S3 – and still, many companies do not use a lot more than that. 15 years later! I just recently heard the argument that you must not use Amazon SQS because it would be too immature. 17 years after its launch (SQS was launched for public usage in 2004) and billions, if not trillions or more messages delivered by it!

Both observations made me wonder and thus I started to dig deeper.

A short trip through IT history

I thought about some other topics like the adoption of big data technologies, IoT and some more and wondered why it takes so long until people understand their potential and adopt them.

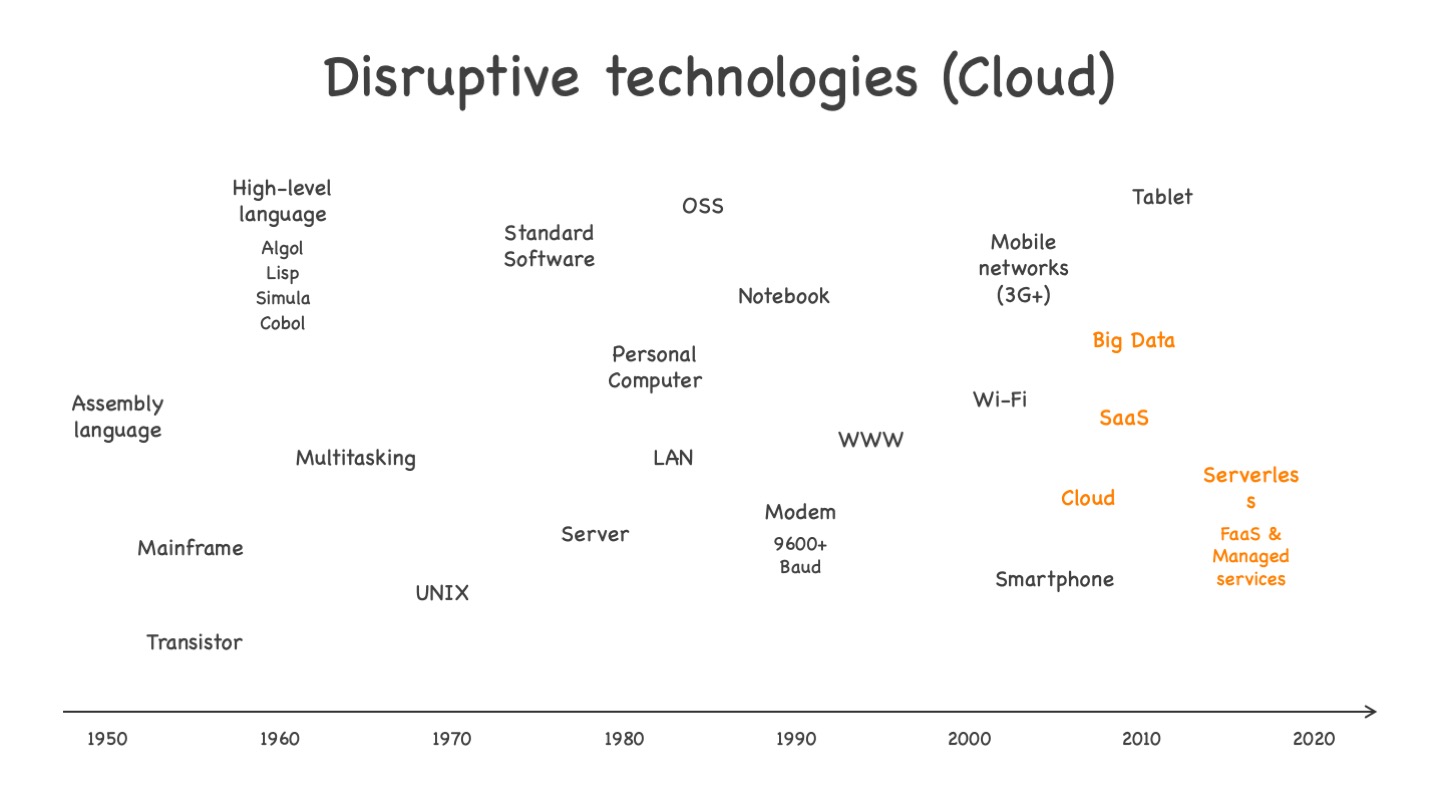

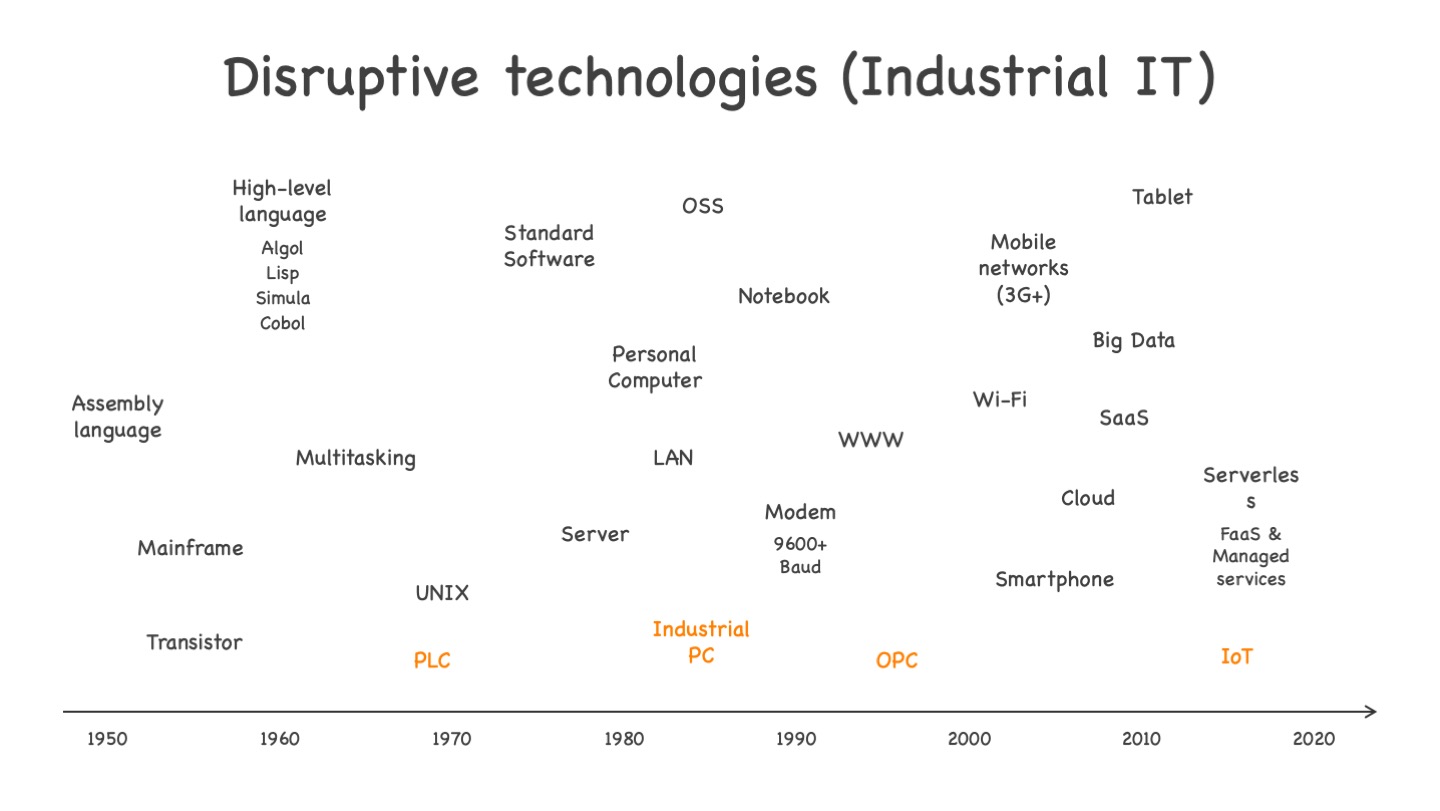

And so I tried to understand if there is a recurring pattern. Therefore, I gathered several innovations from the past that changed the way we do or use IT. I will briefly go through the list in the remainder of this section. To not bore you, I will not discuss every item on the list, but just a few of them. Also, the list does not claim to be complete. These are just several technologies with disruptive effects that came to my mind.

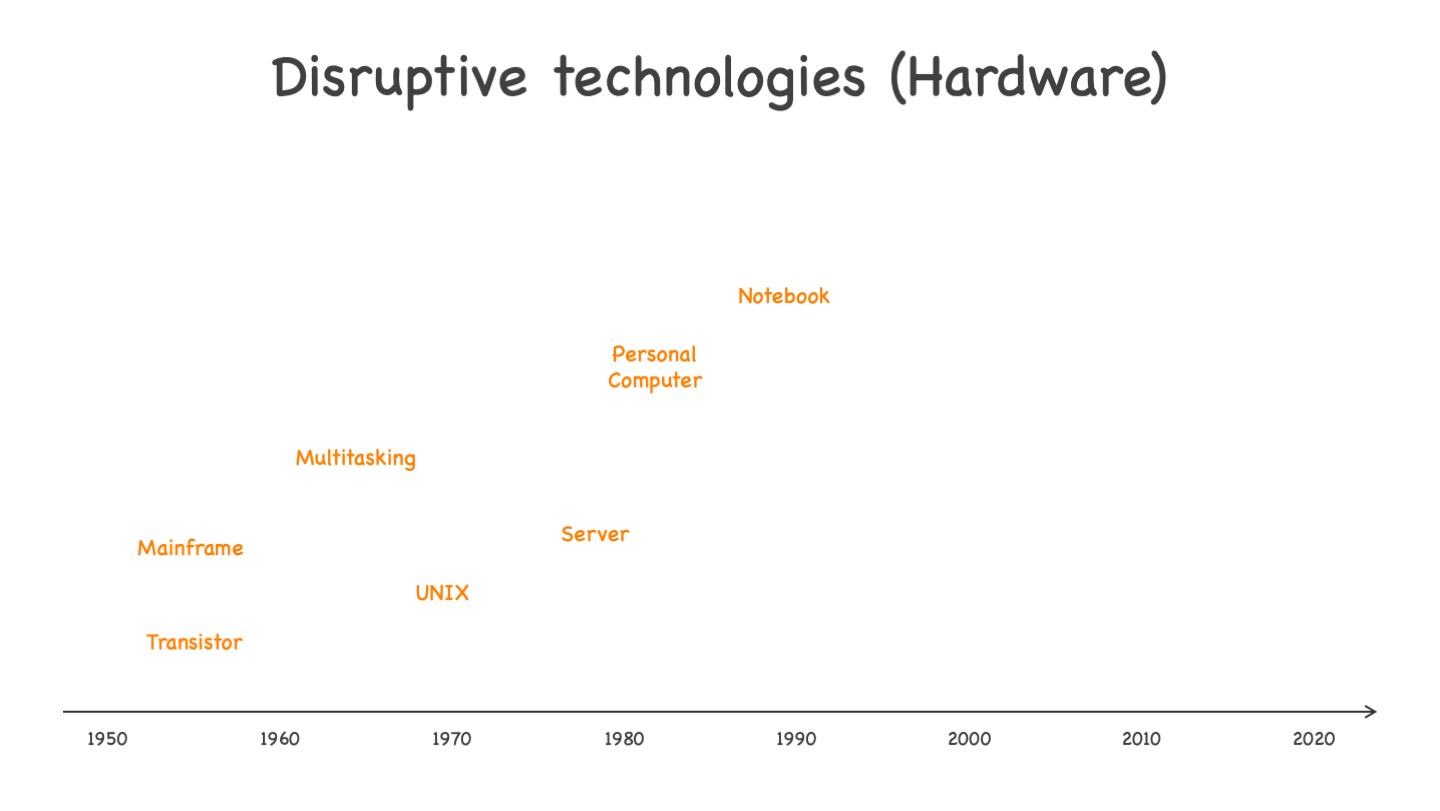

Let us get started with the hardware evolution.

On the hardware side the most notable disruptive technologies were:

- Transistors (mid-1950s) – Making computers usable in commercial contexts

- Mainframes (mid-1950s) – Being the first commercial computer delivery form

- Multitasking (mid-1960s) – Multiplying the perceived performance and enabling online compute for multiple users

- UNIX (late 1960s) – And siblings, fueling the server revolution (I know that UNIX actually is software, but it affected the move from mainframe to servers heavily; that is why I put it here)

- Servers (mid-1970s) – Making computers affordable and starting to make them a mass product

- Personal computer (early 1980s) – Bringing computers to the business departments and into our homes

- Notebooks (late 1980s) – Freeing us from being bound to a location

If you look at the availability dates and at when those technologies became mainstream, you see a large time gap. And many companies still struggle to get rid of their mainframes even though they have had more than 40 years to do so. 2

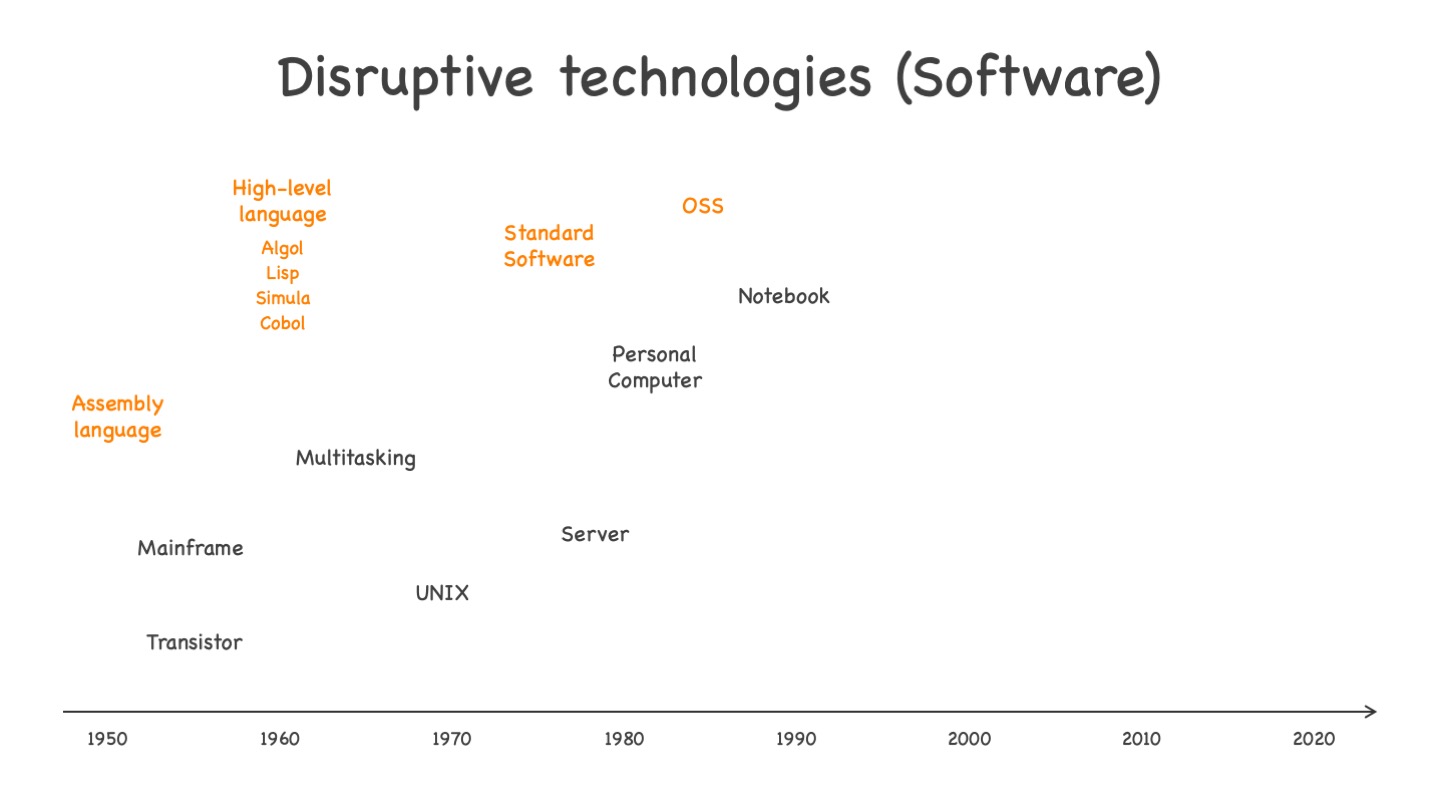

On the software side the most notable disruptive technologies were:

- Assembly language (around 1950) – Making programming accessible for a wider range of people by abstracting away machine level details

- 3GL high-level languages (end of 1950s) – Making programming (relatively) simple for humans. Note that all programming paradigms that are still predominant today (imperative, object-oriented, functional) were already available back then

- Standard software (mid-1970s) – Adding “buy” to the software acquisition options. Before that “make” was the only option

- Open-Source Software (OSS) (revival in the mid-1980s 3) – Enabling completely new levels of software development productivity

Looking at, e.g., OSS we can observe that it took about 20 years from its revival in the 1980s until it became mainstream in commercial software development.

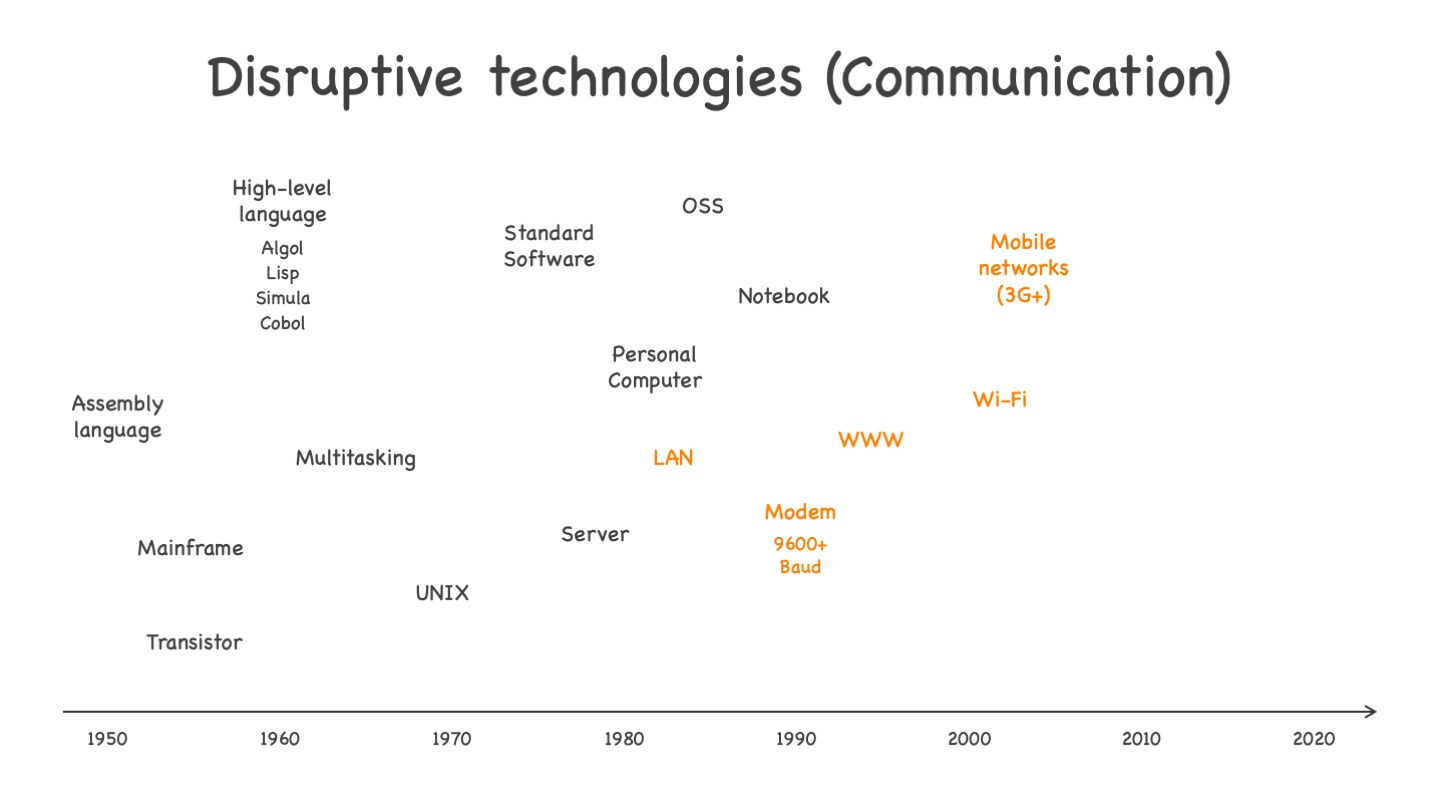

On the communication side the most notable disruptive technologies were:

- Local area network (LAN) (early 1980s) – Enabling simple communication between computers and new types of software like client-server solutions

- Modem (9600+ Baud) (around 1990) – Easy access to the Internet and remote access at a reasonable price, speed and quality

- World-Wide Web (WWW) (early 1990s) – We all know how disruptive this one was!

- Wi-Fi (early 2000s) – No more annoying plugging and unplugging of network cables; work and surf wherever you are

- Mobile networks (3G+) (mid-2000s) – Extending location independence to virtually everywhere

The WWW has been around for more than 20 years now, but it took until the 2010s before it really became mainstream in companies, i.e., before companies understood its consequences such as e-commerce and dealt with it as a matter of course.

And in quite a few hotels, when I use their Wi-Fi, I still think that Wi-Fi has not yet become mainstream (at least in Germany 4). But more importantly, these technologies paved the way for the next class of technologies.

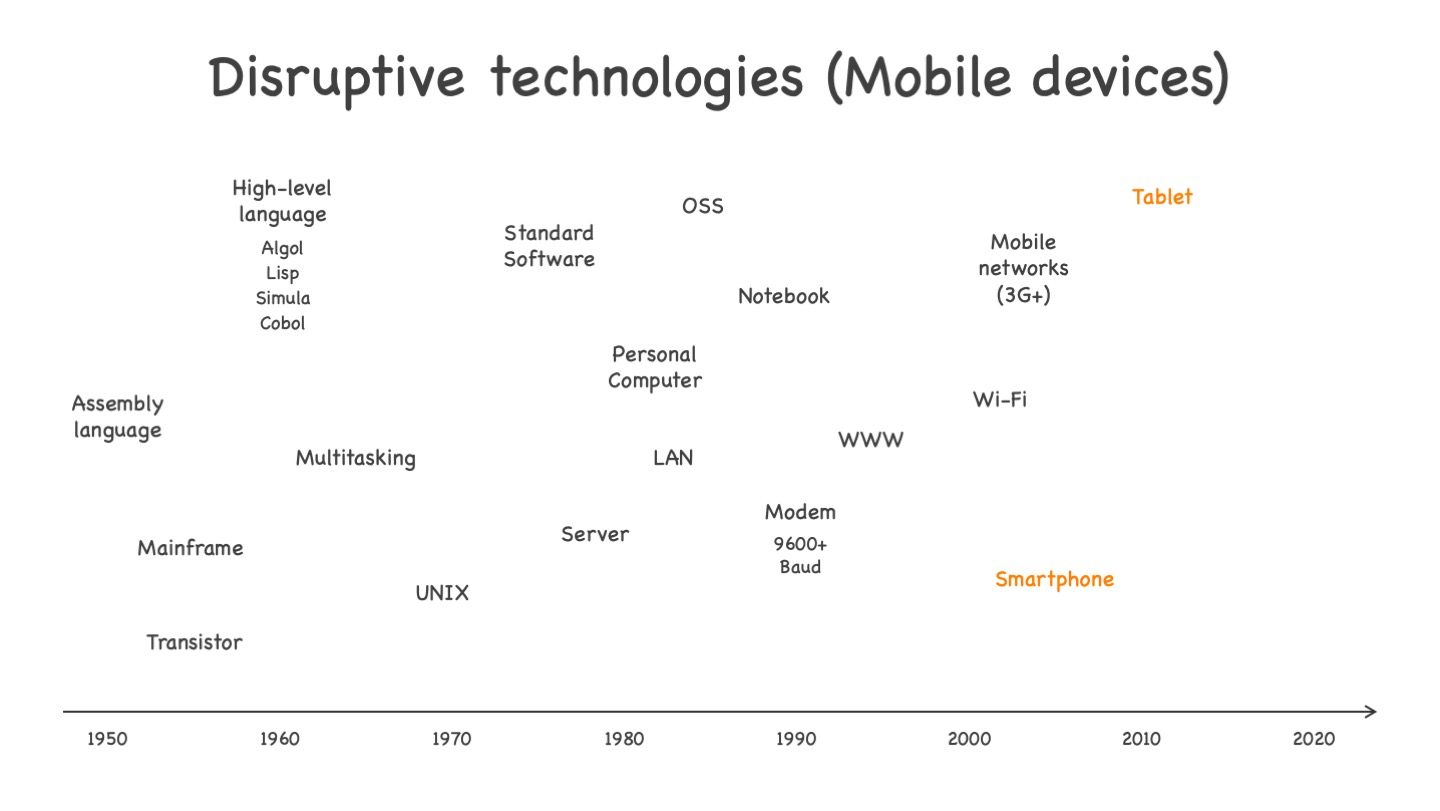

- As mentioned before, the era of smartphones started in 2007 with the iPhone, with the effect that “the Internet walks with the people instead of the people having to walk to the Internet”

- In 2010, Apple again led the way by presenting the iPad, giving rise to tablets, probably the preferred gadget at home these days

In 2014, Apple sounded the bell for wearable devices with the Apple Watch. Still, their effect is not yet even close to the effect of smartphones.

As I discussed the lag effect of smartphones in the beginning, let us move on to the next class of technologies.

- As written before, in 2006 AWS started the public cloud era with the launch of some IaaS-level services like S3 and EC2

- The cloud computing paradigm also gave rise to SaaS (even if some offerings already existed before) in the late 2000s

- Also in the late 2000s, big data approaches started to rise, heavily fueled by the cloud scale-out paradigm

- In the mid-2010s, serverless, being a combination of FaaS and managed services, started to rise

As I discussed in the beginning, many companies are still stuck with partial virtualization (programmatic virtualization of networking is not available in most companies’ cloud approaches) and, on the data side, with data warehouses. They have not understood the options that public cloud provides today at all. Their thinking model is still stuck in the late 1990s or early 2000s (depending on the company).

Finally, when looking at the evolution of industrial IT, we see:

- PLC (late 1960s) – Making production machines “intelligent”

- Industrial PC (mid-1980s) – Bringing high-level computing and process control to the shop floor

- OPC (mid-1990s) – Connecting the shop floor with the enterprise IT

- IoT (mid-2010s) – Embedded computing, industrial computing and enterprise computing becoming inseparable. We can only guess where it will lead in the future

Summing up

This was a quick (and incomplete) journey through some of the most disruptive technologies in IT over the course of the past 70 years. If we look at the dates the technologies became available and then consider when they became mainstream, it basically confirms my claim from the beginning:

It takes about 20 years until a disruptive IT technology arrives at mainstream.

For some technologies it might take a bit less time, for others it might take longer. But on average, an adoption time of 20 years turns out to be a good rule of thumb. At least in Germany, where I live and make most of my observations, this is definitely true.

If we look around at the moment, we can observe that the mainstream has understood the WWW and e-commerce which started in the 1990s. The same is true for OSS and notebooks. Well, maybe COVID helped a bit with notebooks in companies. (Reasonable) Wi-Fi is also on the verge of becoming self-evident.

But everything that started less than 20 years ago is neither really understood nor adopted by the mainstream. This does not mean that nobody uses it or that there are not enough examples to learn from. It is all there. But still it takes around 20 years until the mainstream understands and adopts it – like it or not.

Maybe this adoption lag is not only true for disruptive technologies, but for all types of technologies. I do not know. I only looked at technologies that were game changers in some way.

Note that using something does not necessarily mean one has understood it. E.g., companies started building mobile apps in the early 2010s. Still, they have not understood the potential of smartphones at all. As a consequence, they have not really adopted the technology. They only used it in some superficial way. Real adoption requires full understanding of the technology and the options it provides.

Now what?

To be honest: I was more than just a bit bewildered when I realized this long delay between the introduction of a new technology and its mainstream adoption.

But it really explained why so often, when I suggest to my clients a solution idea that includes some more recent disruptive technology (less than 20 years old), I face plain resistance instead of thoughtful consideration.

E.g., if you suggest managed cloud services as part of a solution these days, in many places you simply get a “No” (typically without any serious arguments) or an endless series of “Yes, but …” (which is a “No” in disguise).

It also does not make a difference if you can show that the solution costs a lot less in development, maintenance and production, that your time-to-market is a lot shorter and that the solution would improve availability and security. The fact that the technology is not yet understood makes it unacceptable.

For me, it is always a bit frustrating to see companies throwing away significantly superior options for the wrong reasons, often letting the internal laggards dictate the speed of progress (which surprisingly often is the IT department and not the business department as one might expect).

I have not found a way yet to accelerate this understanding and adoption cycle and I am not sure if it is possible at all – otherwise, I guess, the lag would not exist.

Of course, this lag makes it easy for those who are open to test new options to come up with superior IT solutions – in terms of time-to-market, delivery speed, costs and dependability (including availability and security). But these companies are not the mainstream. They are the early adopters – and maybe the market disruptors of tomorrow.

Personally, I try to focus on those companies who are open to new ideas and help them supercharge their IT. I simply do not want to wait for 20 years until I can leverage an obviously superior solution option. But this does not solve the problem that we throw away tons of superior solution options in our industry every day, simply because it takes so long until they are understood.

I do not know how you handle the adoption lag. If you feel the same, let us talk. Maybe together we find some better ways to deal with it …

1. I described in “The cloud-ready fallacy” why the typical “vendor lock-in” arguments completely miss the point and how not leveraging the possibilities of the public cloud offerings in the end costs you a lot of time and money and leaves you with a significant competitive disadvantage.

2. I know that reliability was still a huge pro for the mainframes in the early days of (UNIX-based) servers. But by the early 1990s at the latest, servers including some HA software had caught up, providing the same level of reliability at a much lower price. I remember working in a company in the 1990s where we had a lot of workstations (Sun SPARC workstations running SunOS 4.1.x) running critical 24x7 production software. They never failed while I was working in that company. They were running when I started there and they were still running when I left more than 2 years later. Some of them had been up since the day of their commissioning and if nobody pulled the plug, they are probably still running.

3. Open-Source Software (OSS) has actually been available since the 1950s and was quite widespread back then. Still, in the 1960s, vendors discovered software as a salable asset (before that, software was considered a byproduct of the core asset “hardware”), which resulted in a decline of OSS, although it never completely vanished. In the 1980s, OSS experienced a revival that lasts until today.

4. Interestingly, Wi-Fi and mobile connectivity are amazing in many countries that Germans tend to consider “less developed than Germany”. So much for realistic self-perception … ;)
