The microservices fallacy - Part 10

Microliths

In this post we take a look at microliths as an alternative to microservices.

In the previous post we discussed moduliths as a possible alternative to microservices, when it makes sense to go for them and what preconditions must be met to use them successfully.

In this post we will continue with the second alternative I want to discuss in more detail: Microliths. Additionally, we will look at some problems that you might face once you have decided on one of the discussed styles and what you can do about them.

Microliths

If you do not need to move fast and/or do not have multiple teams but – as opposed to the modulith setting – have special runtime requirements in the form of very disparate non-functional requirements (NFRs), microliths might be a sensible choice.

They can also be a sensible choice if the preconditions for using microservices are met, but you are not able or willing to pay the price that microservices exact by creating a fully distributed service landscape.

Finally, microliths can also be a sensible choice if you simply want to go for services for fashion reasons or the like without fulfilling any of the required preconditions. By adding a few constraints to the microservices style, microliths mitigate the most harmful effects of microservices that would otherwise hit you.

A microlith is basically a service that is designed using the independent module design principles and that avoids calls between modules/services while processing an external request. This typically requires some status and data reconciliation mechanism between services to propagate changes that affect multiple microliths. The reconciliation mechanism must be temporally decoupled from the external request processing. 1

Having separated the processing of the external request from the communication between microliths, you can split your monolithic application into several smaller parts without being hit by the full force of the imponderabilities of distributed systems.

The trade-off is that you have to pay the price of temporally decoupled reconciliation between the microliths. On the other hand, you do not need to deal with the problems that arise if a call to another service fails while processing an external request.

This also requires a design of mutual independence, as microliths are not allowed to call each other while processing an external request. This might feel unfamiliar to engineers who are used to thinking in layers, reusability and call-stack based divide and conquer techniques:

- All functionality needed for a use case (or at least a user interaction) must be implemented inside a single microlith.

- All data required to serve an external request must be in its database – if necessary, replicated from another microlith’s database via the data reconciliation mechanism.

- Reusable functionality needs to be provided via libraries or some other implementation or build time modularization mechanism, not via other services. 2
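
The following is a minimal sketch of these rules, not a definitive implementation. In-process objects stand in for separately deployed services, and all names (`ChangeFeed`, `CustomerMicrolith`, `OrderMicrolith`, `reconcile`) are hypothetical. The order microlith serves requests from its own data only and pulls changes from the customer microlith in a separate reconciliation step, which is assumed to run on its own schedule and never inside a request.

```python
from dataclasses import dataclass, field


@dataclass
class ChangeFeed:
    """Append-only list of changes a microlith publishes for others (hypothetical)."""
    entries: list = field(default_factory=list)

    def publish(self, key, value):
        self.entries.append((key, value))

    def read_since(self, offset):
        """Return all entries from `offset` on, plus the new offset."""
        return self.entries[offset:], len(self.entries)


class CustomerMicrolith:
    """Owns customer data and publishes every change to its feed."""

    def __init__(self):
        self.customers = {}
        self.feed = ChangeFeed()

    def handle_rename(self, customer_id, name):
        self.customers[customer_id] = name
        self.feed.publish(customer_id, name)


class OrderMicrolith:
    """Serves order requests using only its own database."""

    def __init__(self, customer_feed):
        self._customer_feed = customer_feed
        self._offset = 0
        self.customer_replica = {}  # local copy, possibly stale
        self.orders = []

    def handle_place_order(self, customer_id, item):
        # No call to CustomerMicrolith here: only local data is read.
        name = self.customer_replica.get(customer_id, "<unknown customer>")
        order = {"customer": name, "item": item}
        self.orders.append(order)
        return order

    def reconcile(self):
        # Assumed to run on its own schedule (timer, batch job, ...),
        # never as part of processing an external request.
        changes, self._offset = self._customer_feed.read_since(self._offset)
        for customer_id, name in changes:
            self.customer_replica[customer_id] = name
        return len(changes)
```

An order placed before `reconcile()` has run sees stale (here: missing) customer data. That is the price of temporal decoupling. In return, `handle_place_order` cannot fail or block because the customer microlith is slow or unavailable.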

Such an architectural style feels a lot like going for many small independent applications. Each application handles a subset of the use cases of the monolithic application. A (logically) batch-like communication between services handles the status and data reconciliation. In my experience, most software engineers (and also operations engineers) coming from an enterprise software development background can handle this architectural style a lot better than full-blown microservices.

Some people also call microliths “microservices”. I do not.

While you could argue that microliths are microservices with just a few additional constraints applied, these constraints are exactly what make the difference.

Remember that Roy Fielding, the “inventor” of REST, defined the REST architectural style in his dissertation by applying six constraints (client-server, stateless, cache, uniform interface, layered system and the optional code-on-demand) to generic network communication. Any constraint added, changed or removed would have resulted in a different architectural style.

So, constraints are crucial in architectural design. Different constraints mean a different architectural style with different properties.

The seemingly minor constraint that a service must not call other services while serving an external request makes a huge difference in practice. The service processing the external request does not need to wait for other services to complete the request processing and does not need to deal with failures that might happen while calling other services. It is not confronted with all the intricacies of distributed systems, but just with the subset that is already known from monolithic application landscapes.

That is why I do not call these types of services microservices, but microliths. 3

Microliths additionally make technology changes easier than moduliths because they are smaller. Thus, the units of migration are smaller and you might be able to migrate them microlith by microlith without needing to migrate microlith parts piecewise.

Handling long-running use cases

A last thought regarding microliths: Sometimes you have long-running use cases. A long-running use case is a use case where the actor does not expect the use case to complete immediately but at a later point in time. The actor usually triggers the use case and then gets notified later about its completion.

For example, applying for insurance is a long-running use case. You fill out the application form. Then a while later you might receive an email with a PDF application attached to it that you need to sign and send back. Another while later you eventually receive the insurance policy.

A long-running use case may or may not consist of multiple temporally decoupled user interactions. Sometimes there is only a triggering user interaction in the beginning and (hopefully) some kind of completion notification at the end while all intermediate activities are invisible to the users. Sometimes there are intermediate interactions involved that are temporally decoupled from the first one and each other.

You might not want to handle such a long-running use case in a single microlith – especially if the intermediate activities span multiple business domains like in the case of an insurance application review (fraud assessment, credit assessment, insurance risk assessment, …).

To model such long-running use cases, you can use horizontal call chains. A horizontal call chain is a series of services that call each other asynchronously. Their processing is temporally decoupled. The first service notifies the second service (however this is implemented on a technical level). The second one eventually does its processing and notifies the next service. And so on, until eventually the last service notifies the original actor about the completion of the use case.

In such a setting, services call each other directly (messages) or indirectly (events) to advance the execution of the use case triggered by the initial external request. While we create a connection between microliths that goes beyond data reconciliation, this connection is still temporally decoupled.

This kind of additional inter-service communication is fine and does not downgrade the runtime properties of the microliths involved, provided that:

- The asynchronous call does not impair any external user request processing if the call should fail.

- Notifications do not get lost on the way if a technical error occurs (depending on the technology chosen there are different means for that).
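
One way to meet both conditions is to store each notification locally while processing the request and to deliver it in a separate step, retrying until the next service has accepted it. The sketch below illustrates this idea under stated assumptions: all names (`Outbox`, `ApplicationIntake`, `FraudAssessment`) are hypothetical, the services are in-process objects, and the outbox lives in memory. A real outbox would have to be persisted, for example in the microlith's own database, to survive a crash.

```python
from collections import deque


class Outbox:
    """Stores notifications locally until the next service has accepted them."""

    def __init__(self, deliver):
        self._deliver = deliver  # callable that may raise on technical errors
        self._pending = deque()

    def enqueue(self, notification):
        # Called while processing the request: only a local write, so it
        # cannot fail because of the receiving service.
        self._pending.append(notification)

    def flush(self):
        """Try to deliver pending notifications in order; keep the ones that fail."""
        delivered = 0
        while self._pending:
            notification = self._pending[0]
            try:
                self._deliver(notification)
            except ConnectionError:
                break  # receiver unavailable: retry on the next flush
            self._pending.popleft()  # remove only after successful delivery
            delivered += 1
        return delivered

    def pending_count(self):
        return len(self._pending)


class ApplicationIntake:
    """First service in the chain: accepts the insurance application."""

    def __init__(self, outbox):
        self._outbox = outbox
        self.applications = []

    def handle_submit(self, application_id):
        self.applications.append(application_id)
        self._outbox.enqueue({"type": "application_submitted", "id": application_id})
        return "accepted"  # the response does not depend on the next service


class FraudAssessment:
    """Second service in the chain; `available` simulates a technical outage."""

    def __init__(self):
        self.available = True
        self.received = []

    def notify(self, notification):
        if not self.available:
            raise ConnectionError("fraud assessment unreachable")
        if notification not in self.received:  # tolerate redelivery
            self.received.append(notification)
```

If `FraudAssessment` is unavailable when `flush()` runs, `handle_submit` has already returned successfully and the notification simply stays in the outbox until a later `flush()` succeeds. Because a notification may be delivered more than once in such a scheme, the receiver in this sketch ignores duplicates.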

There would be a lot more to write about microliths. But I will leave it here. The idea and how they differ from microservices and moduliths should be clear.

Challenges and options

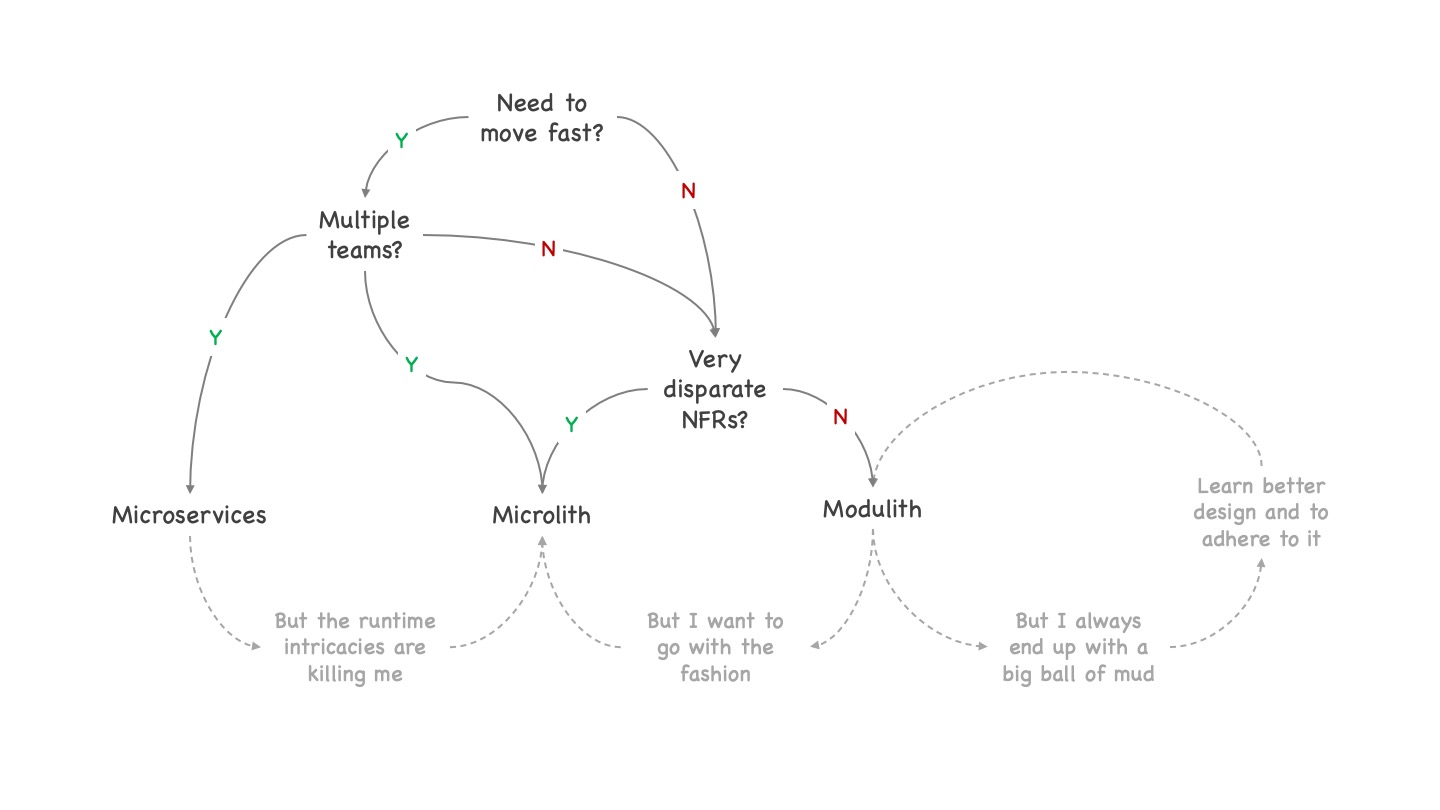

If you visualize the decision tree for choosing between microservices, moduliths and microliths, you get a figure like this:

Of course, these are not all decisions to be made. As I wrote before:

Basically, there are many options with many variants, all of them having different advantages and disadvantages depending on your specific context. Here I only will discuss two styles that are relatively close to microservices, yet having quite different properties that suit most typical enterprise contexts a lot better.

We only discussed two alternative styles where actually many styles and variants are possible. In the same way, we only looked at a few relevant decisions where many more decisions can influence the choice. Still, these are quite fundamental decisions and I often see them ignored in order to blindly follow the hype. Thus, I think the tree is certainly useful.

As written before: Depending on your concrete situation there may be more influencing factors leading you to a different decision. This is perfectly fine. We cannot discuss all potential influencing factors here.
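
As a rough illustration, the few decisions named in this post can be written down as a small function. This is only one possible reading of the text, not a transcription of the figure. The function name and all parameter names are hypothetical, and real decisions depend on more factors than these four flags.

```python
def choose_style(fast_and_multi_team,
                 disparate_nfrs,
                 can_pay_distribution_price,
                 services_are_mandated=False):
    """Suggest an architectural style based on the decisions discussed in the text.

    fast_and_multi_team: you need to move fast and have multiple teams.
    disparate_nfrs: parts of the application have very different NFRs.
    can_pay_distribution_price: you are able and willing to handle a fully
        distributed service landscape at the application level.
    services_are_mandated: services are required for non-technical reasons
        (e.g., pressure to follow the hype).
    """
    if fast_and_multi_team:
        # Preconditions for microservices are met; the remaining question is
        # whether the price of full distribution can be paid.
        return "microservices" if can_pay_distribution_price else "microliths"
    if disparate_nfrs:
        # Special runtime requirements without the need for microservices.
        return "microliths"
    if services_are_mandated:
        # Services without fulfilling any precondition: constrain them.
        return "microliths"
    return "moduliths"
```

For example, `choose_style(False, False, True)` suggests moduliths: neither team-related nor runtime-related reasons call for services, regardless of whether the team could handle distribution.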

No matter how you actually came to a decision you might face some challenges over time. Here I only want to discuss a few typical challenges and transition options to mitigate them.

The first challenge I want to discuss is that you decided on microservices and learn over time that you underestimated the runtime intricacies by far. You get overwhelmed by them. The typical enterprise response pattern in such a situation is to call for another piece of runtime infrastructure, expecting that it solves the problem – and to continue without changing anything in development.

We currently see exactly this response pattern: To counter the challenges resulting from the imprudent introduction of microservices, more and more complex infrastructure is piled up, hoping to mitigate the problems: Kubernetes, service meshes, event brokers, process engines, API management platforms (for managing internal “APIs”, which usually is more about managing services than actual APIs), etc.

Yet, the complexity of an unconstrained distributed system does not magically go away by throwing additional tooling at it. You need to balance safety and liveness. You cannot maximize both of them in distributed systems.

As liveness (“something good will eventually happen”) is a must in basically every commercial application, perfect safety (“something bad will never happen”) is not possible. A compromise fitting the individual needs must be found.

The call for infrastructure tooling is but the futile attempt to obtain perfect safety and liveness from the infrastructure while not having to care about it on the application development level.

Thus, while some tooling is perfectly fine (we do not need to make life harder than necessary), it must be clear that the effects of distribution will still hit on the application level. The intricacies and imponderabilities of distribution must be handled on the application level. The development team needs to ponder and handle them. The infrastructure tooling may help to a certain degree, but without explicit support in application development, application liveness and safety will suffer.

If this turns out to be too challenging for the software developers involved (which often is the case 4), a possible option is to transition to microliths.

Microliths are still services, but they constrain general microservices in a way that most of the hard intricacies of microservices are nicely mitigated. This way microliths are a lot closer to what the typical enterprise software developer is used to and can safely handle.

Other challenges can occur if you decide against services and stick to deployment monoliths. One challenge can be the pressure to go with the hype. Even if I always write that you should not go with the hype, we must not underestimate the opposition and pressure that you face if you decide against an ongoing hype.

Sometimes it is simply not possible to resist that pressure. In this particular context it would mean that you need to yield to the microservices pressure. My recommendation in such a situation would be to go for microliths rather than unconstrained microservices in order to mitigate the worst risks. You can still call them “microservices” if needed to satisfy the desire for following the hype.

Another challenge when using moduliths can be that you always end up with a big-ball-of-mud like monolith, not a well-structured modulith. In that situation you need to work on your design skills. I will discuss this issue in some more detail in the next post (link will follow).

Many companies resorted to microservices instead. They were not able to master the required design skills and implementation discipline (not to violate the agreed upon design) and hoped that the enforced boundaries of microservices would solve their problems.

This is not an option. If you take that route, you inevitably end up with a distributed ball of mud, which means the worst of both worlds: Maintenance and evolution suffer at development time while at the same time operations suffers from having to run a tightly coupled, brittle distributed system.

Or to express it more pointedly: You experience development hell due to a lack of skills and discipline and, as a remedy, you decide based on pure hope to extend hell to operations. At least for me, that sounds like a bad plan – maybe better work on design skills and implementation rigor. 5

Summing up

In this post we discussed the second alternative to microservices: Microliths. They are a sensible choice if you do not need to go fast and/or do not have multiple teams, but do have very disparate NFRs for different parts of your application. While they still create a distributed system, their constraints mitigate those effects of distribution that software engineers typically struggle with most.

Additionally, we discussed a few typical challenges that people often face after deciding on one of the three alternatives microservices, moduliths or microliths and how to respond to them.

As always there would be a lot more to say. But I will leave it here.

In the next and final post of this series, I will complement what I have written so far with a few general recommendations. Stay tuned …

1. This temporal decoupling is often called “asynchronous”, which is not accurate as the communication style of the reconciliation mechanism does not matter. It can be either synchronous (e.g., Atom-based HTTP call) or asynchronous (e.g., via an event-based message broker). The key point is that the data reconciliation is temporally decoupled from serving the external request, i.e., is not coupled in any way to serving the external request. Due to this decoupling, potential failures in the reconciliation process do not affect serving the external request.

2. To avoid introducing dependencies between the microliths through the back door, you must make sure that different versions of libraries (or whatever implementation or build time modularization approach you are using) providing common reusable functionality are backwards compatible. Otherwise you would force all users of the library into a lockstep release process when releasing a new library version – which is the opposite of what you want to achieve.

3. The Self-contained Systems website (SCS) basically discusses the microlith style. The only difference to microliths is that SCS additionally require the UI to be part of the services. While this additional constraint is useful in certain contexts (e.g., for e-commerce web sites where this style originates from), I do not think that it is useful in all contexts. Still, besides this additional constraint SCS discuss the microlith style quite nicely.

4. Not being able to handle an unconstrained distributed system is nothing to be ashamed of. Distributed systems are particularly hard, and so is pondering their effects. It takes a lot of training to understand the effects of distribution and how to respond to them. The typical education for software developers barely touches distributed system design, and once employed, most software developers never get the time to learn it.

5. To be fair: The lack of proper designs and implementation discipline often is a consequence of the misguided feature pressure that can be seen in most companies. This pressure prevents better designs and penalizes implementation discipline. In such settings, first the required preconditions need to be established. I already discussed the issue in several posts, e.g. in the “Simplify!” blog series. Thus, I will not repeat the discussion here.