Reality check

When systems live in their own world

Recently, I had two experiences within a few days that made me think about system dependability. In both situations, the systems acted detached from their surrounding reality and thus became confusing or even annoying, even though it would have been easy for them to detect their detachment from reality.

A train in the past

The first experience was on a train. I traveled from Munich to Cologne. As sometimes happens on German ICE trains, the information displays had a hiccup at the beginning of the trip, i.e., they did not display any information. About 30 or 40 minutes later, the system worked again. Probably someone restarted it.

Unfortunately, it worked detached from reality. Apparently, it assumed that it had been started at the beginning of the journey. As a result, it still showed Nuremberg, the first stop after Munich, as our next stop half an hour after we had left Nuremberg. What made that situation particularly odd was the fact that the information system also showed the current time.

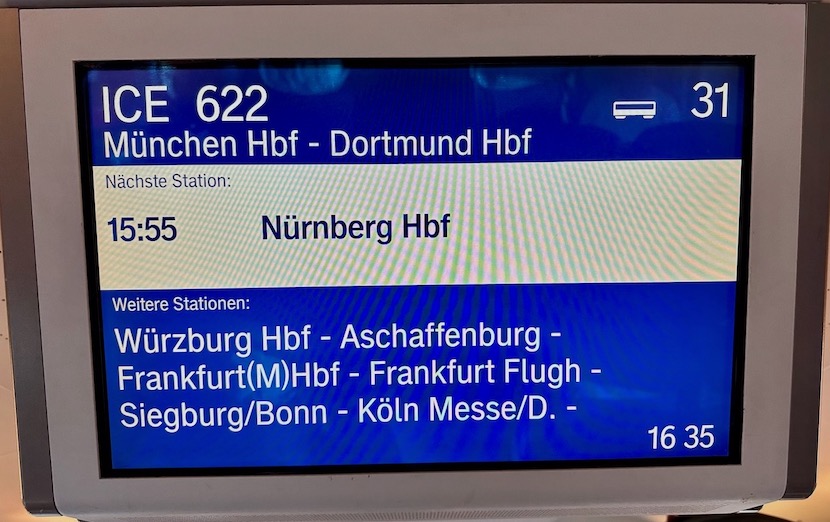

This means, it displayed an “upcoming” arrival from the past and the current time at the same time. I took an image of the display that lived in its own reality:

The white bar in the upper half of the information display shows that we would arrive at the next station (“Nächste Station” in German), Nuremberg (“Nürnberg”), at 15:55. At the same time, the display showed the current time, 16:35, in the lower right corner.

The system was able to display the current time, i.e., the current time was available to the system. Why didn’t it use this information to cross-check its internal state? It would have been immediately obvious that its current state did not reflect reality, i.e., that it was out of sync with the real world. [1]
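Such a cross-check could be as small as comparing the announced arrival time with the clock. The following Python sketch illustrates the idea. It is not how the actual train software works: `NextStop`, `is_plausible`, `render` and the 10-minute tolerance are hypothetical names and values chosen for illustration, and a real system would also have to take known delays into account.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class NextStop:
    # Hypothetical internal state of the display, not the real data model.
    name: str
    expected_arrival: datetime


def is_plausible(stop: NextStop, now: datetime,
                 tolerance: timedelta = timedelta(minutes=10)) -> bool:
    """An 'upcoming' arrival must not lie clearly in the past."""
    return now <= stop.expected_arrival + tolerance


def render(stop: NextStop, now: datetime) -> str:
    # Safe mode: show nothing specific rather than something obviously wrong.
    if is_plausible(stop, now):
        return f"Next stop: {stop.name} at {stop.expected_arrival:%H:%M}"
    return "Next stop: information currently unavailable"


# The situation from the photo; the date itself is arbitrary.
stale = NextStop("Nürnberg", datetime(2024, 1, 1, 15, 55))
now = datetime(2024, 1, 1, 16, 35)
print(render(stale, now))
```

With the values from the photo, the check fails and the display falls back to the neutral message instead of announcing an arrival 40 minutes in the past.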

Based on its current implementation, the system is not dependable, i.e., people cannot depend on it. Additionally, as the system showed obviously contradictory information without noticing it, it also undermines future trust in the system.

Actually, at some point in time the information system started to show the right next station that we would arrive at. Probably, the train staff noticed the flaw and they manually updated the state of the system. Still, nobody who observed the flaw – including me – relied on the displayed information anymore.

A car in the river

The second experience was with my car. I just got a new car. It is not a big, fancy car. Still, it has lots of software in it, as most new cars do these days. One evening, I took a trip to Cologne to meet an old friend. So far, so good. But when I drove home, the car lost track of where it was. While I was heading North in Cologne, the car software was convinced that the car was going East. [2]

As a result, the map showed that I was driving on the Rhine – or in the Rhine? This could have been the first reality check for the navigation software: Cars do not tend to drive on or in rivers. Unless they own an amphibious vehicle (which my car definitely is not), car owners try to avoid driving in rivers by all means.

Eventually, the software realized that something was odd and tried to place my car back on a street. For a while, it sent me further East. Then, something even stranger happened. The software decided that the car was at a completely different location going South (while I was still going North most of the time).

As a consequence of this arbitrary car relocation, based on the map, I drove straight through Phantasialand, a big amusement park located south of Cologne. In reality, I drove on a regular street on my way home. This could have been another hint for the car software: Cars do not drive through amusement parks – especially not at 50 km/h.

Then again, the software tried to place the car back on roads while switching between going South and West (while I was going North and East).

Now, things started to turn from confusing to annoying because by then I was driving on a highway. But the software assumed the car was driving through residential streets and regular city streets, and some “smart” features of the car software started to “shine”:

The map includes speed limit information, and my car displayed the speed limits of the roads the software assumed the car to be on. As a result, I was driving on a German highway while the car was constantly warning me that I exceeded the 30 km/h allowed in the street it erroneously thought the car was in. It also gave other kinds of odd hints and warnings.

At the same time, my car has built-in traffic sign detection, which recognized the traffic signs along the highway I was on: 100 km/h, 120 km/h, 80 km/h, no speed limit, and so on. That section of the highway has a lot of speed signs. So, there was a lot of information the traffic sign detection picked up.

This made the situation even more confusing: Whenever the traffic sign detection picked up a speed limit sign, the car used that as the current speed limit reference: No warnings. Then the navigation software picked up a speed limit from the imaginary street it thought I was driving on: Warnings. Then the traffic sign detection picked up another speed limit sign … and so on.

The car software jumped arbitrarily between real and imagined speed limits and based its hints and warnings on them: 100 km/h, 30 km/h, 120 km/h, 50 km/h, 120 km/h, 30 km/h, 100 km/h, and so on. Luckily, I had switched off all the hard warnings beforehand, such as acoustic warnings when you exceed the speed limit by more than 10 km/h or the like. Had I switched them on, I probably would have returned the car to the dealer the next day.

Eventually, I safely arrived back home, ignoring all the hints and warnings coming from my car. Luckily, I did not need navigation for that trip. Otherwise, I would have been in deep trouble. This way, it was just annoying.

But more importantly: The car software received a lot of information from the traffic sign detection which massively contradicted the information it got from the navigation software. One mismatch may be a detection error. But 10 signs in a row? The car software got dozens of hints from the traffic sign detection and other means that its navigation software was detached from reality.

For example, it is more than unlikely that I drive 120 km/h in a street that is restricted to 30 km/h because it is technically impossible to constantly drive 120 km/h for a longer period of time in a 30 km/h residential street. Also, the facts that the car drove through buildings all the time on the map and jumped from one location to the next would have been clear indicators that something must be off.

Why didn’t the car software use all those hints to cross-check the navigation software and to realize that it had a problem? This time, it was only annoying. In other settings, the effects of letting an obviously failed system continue to run and trigger actions could have been worse.
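To make the idea concrete, here is a minimal Python sketch of such a cross-check. It counts how often the detected signs contradict the map in a row and stops trusting the map after a few consecutive mismatches. Everything in it is an assumption for illustration: the class `SpeedLimitCrossCheck`, the tolerance of 10 km/h and the threshold of three mismatches are made up and not taken from any real car software.

```python
from dataclasses import dataclass


@dataclass
class SpeedLimitCrossCheck:
    # Hypothetical thresholds; real values would need careful tuning.
    tolerance_kmh: int = 10
    max_mismatches: int = 3
    mismatches: int = 0

    def observe(self, sign_limit_kmh: int, map_limit_kmh: int) -> None:
        """Compare a detected sign with the limit the map claims."""
        if abs(sign_limit_kmh - map_limit_kmh) > self.tolerance_kmh:
            self.mismatches += 1
        else:
            # Simplification: a single agreement restores trust.
            self.mismatches = 0

    @property
    def map_trusted(self) -> bool:
        # One mismatch may be a detection error, several in a row are not.
        return self.mismatches < self.max_mismatches


check = SpeedLimitCrossCheck()
for sign, map_limit in [(100, 30), (120, 50), (120, 30)]:
    check.observe(sign, map_limit)
print(check.map_trusted)
```

Once `map_trusted` turns false, the car would stop deriving warnings from the map position until the two sources agree again. Whether a single agreement should be enough to restore trust is a design decision that this sketch deliberately keeps simple.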

The Reality check pattern

When I observed the second incident (actually the train incident was the second incident), I started thinking because at its core, it was the same failure pattern:

- A system that must be tightly bound to reality to be useful is detached from reality.

- As a consequence, it shows the wrong information, sends the wrong signals and triggers the wrong actions.

- It has access to information that clearly reveals that it currently is detached from reality.

- But it does not use the information to do a “reality check”.

- Instead, it continues living in its own reality, showing wrong information and sending wrong signals.

When putting my resilience and dependability hat on, a resilient software design pattern emerged in my head. Here is my first attempt to write down its essence:

… a system is bound to its encompassing reality, i.e., the information it emits and the actions it triggers only make sense if the state of the system matches its encompassing context. Otherwise, its information and actions are pointless in the best case and harmful in the worst case.

***

Due to software bugs or other unexpected problems, it can happen that the internal state of the system does not match the state of the encompassing world. This needs to be detected and the system should be put into a safe mode where it cannot create any confusion or harm until the internal state matches the state of the encompassing world again.

Therefore:

Validate the internal state of the system by cross-checking with other information sources that are also bound to reality. If the information does not match, put the system into a safe mode where it cannot emit any confusing or harmful information and actions.
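In code, the core of the pattern might look like the following Python sketch. It is only one possible shape, under simplifying assumptions: `RealityCheckedSystem`, `Mode` and the idea of passing plausibility checks as plain callables are hypothetical choices, and the safe mode here simply suppresses all output.

```python
from enum import Enum
from typing import Callable, Iterable, Optional


class Mode(Enum):
    NORMAL = "normal"
    SAFE = "safe"


class RealityCheckedSystem:
    """Wraps output behind cross-checks against other reality-bound sources."""

    def __init__(self, checks: Iterable[Callable[[], bool]]) -> None:
        # Each check returns True if the internal state still matches
        # an independent source of information (clock, sensor, ...).
        self._checks = list(checks)
        self.mode = Mode.NORMAL

    def reality_check(self) -> Mode:
        in_sync = all(check() for check in self._checks)
        self.mode = Mode.NORMAL if in_sync else Mode.SAFE
        return self.mode

    def emit(self, message: str) -> Optional[str]:
        # In safe mode nothing is emitted, so nothing can confuse or harm.
        if self.reality_check() is Mode.SAFE:
            return None
        return message


in_sync = RealityCheckedSystem(checks=[lambda: True])
detached = RealityCheckedSystem(checks=[lambda: True, lambda: False])
print(in_sync.emit("Next stop: Nürnberg"))
print(detached.emit("Next stop: Nürnberg"))
```

Because the check runs on every `emit`, the system leaves safe mode on its own as soon as all sources agree again.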

Of course, this pattern needs some more discussion, e.g.:

- If I only have two systems that contradict each other, which of the systems should I put into safe mode?

- How much proof of a detached reality do I need before I put the system into safe mode?

- What are useful indicators of a reality detachment?

So, this pattern still needs some more work. But its core idea already becomes clear and it would have led to very different experiences regarding the dependability of the two systems I described.

In both situations I experienced, the systems obviously completely failed to question their internal state at any point in time. From a resilience and dependability perspective, this is bad design. A system whose viability depends on being in sync with the external reality should regularly check if its internal state is still in sync with the outside world. Neglecting to do so results in confusion, harm or worse.

Just imagine my car had not simply annoyed me with warnings that I was driving too fast. Instead, imagine it had enforced the speed limit by slowing the car down to 30 km/h on a highway. That would have been really dangerous. And I am sure that during the design of the car software, this option was discussed.

There tends to be so much blind faith in the correct functioning of software that people rarely consider the consequences of features if the software should not work as expected.

This becomes an even bigger problem because we enter the safety-critical domain more and more often. E.g., the car navigation software is at the boundaries of safety. All these pointless warnings and the wrong information that continuously popped up were highly distracting, which is on the verge of being harmful.

The problem is that those software systems are developed more and more often like traditional enterprise software, staffed with traditional enterprise product managers, enterprise software engineers and so on. While (most of) those people know how to write solid enterprise software, they tend to be notoriously bad regarding safety. If you combine this lack of safety knowledge with blind faith in the correct functioning of the software, bad things are just around the corner. [3]

Summing up

I had some odd experiences where software that needs to be in sync with its surrounding reality was out of sync and thus behaved in confusing or annoying (luckily not harmful) ways.

In both cases, the software could have noticed its detachment from reality but failed to do so. Instead, it sort of insisted on its own reality and thus was not dependable. In the worst case, such behavior can lead to harm or worse, especially these days as IT and OT grow together more and more tightly.

Based on the observation, I derived a pattern-based description of how such systems need to check if they are still in sync with their encompassing world and what they need to do if they are not.

Probably the pattern is not new but already exists under a different name, and I simply do not know it. But observing how the software simply continues living in its own world detached from reality while still sending signals to the rest of the world, causing confusion, harm or worse, I think we desperately need to implement this pattern more often, whatever it is called.

Hence, if you work on such a system, maybe check if you implemented such a reality check. And if you know someone else who works on such a system, maybe ask them if they implemented the reality check pattern. Software dependability becomes more and more vital. So let us make sure the systems we create are dependable …

[1] For those people using German trains regularly: The train was (almost) on time. At the time I took the picture, we had left Nuremberg more than half an hour earlier. Additionally, the information system did not have any delay information at that time, i.e., it showed that we would arrive at Nuremberg at the regular time.

[2] GPS did not have a problem. I checked with my mobile phone when I arrived at home and it immediately showed the right location while my car navigation software was still lost. Maybe the car had a technical problem that kept it from picking up the GPS signal. But then again, it should be able to detect the missing signal and respond to it, e.g., by deactivating the navigation software and all the signals it sends (like the wrong speed limit warnings, see text) until the connection to GPS is restored.

[3] I have to admit that I am quite worried observing this trend to put more and more half-baked software (from a dependability perspective) into safety-critical environments. Based on the blind faith many product managers and software engineers seem to have in the flawless functioning of their software, it only seems to be a matter of time until this kind of software seeps into central, safety-critical control parts of physical systems, such as steering, accelerating and braking.
