The reusability fallacy - Part 3

Why the reusability promise does not work

In the second part of this blog series about reusability, I discussed the costs of making a software asset reusable. It turned out that creating an actually reusable asset costs several times the effort of creating the same asset for a single purpose.

Additionally, we looked at asset properties that work as reusability promoters or inhibitors to understand which functionalities are worth making reusable and which are not. It turned out that almost everything worth making reusable already exists, typically as part of a programming language ecosystem or an OSS solution. On the other hand, the architectural paradigms that are usually sold with reusability as a huge cost saver typically target functional domains that offer little reuse potential.

Distributed systems as “reusability enablers”

In this post, I will start by discussing why reusability in distributed systems is a false friend. The problem is that most of the architectural paradigms that are sold with “reusability” result in distributed systems, i.e., systems where the different parts, especially the “reusable” ones, live in different process contexts and use remote communication to interact.

It started with the Distributed Computing Environment (DCE) in the very early 1990s. A bit later in the 1990s, the next big hype was the Common Object Request Broker Architecture, better known as “CORBA”. When that hype faded, we had language- or platform-specific approaches like Enterprise JavaBeans (EJB) or Microsoft’s Distributed Component Object Model (DCOM), before the Service-Oriented Architecture (SOA) caused the next big hype in the 2000s. The latest paradigm, “Microservices”, became popular in the mid-2010s.

All these architectural paradigms (and probably some more I forgot to list) were – and still are – sold with reusability as one of the big productivity drivers and cost savers (see the fallacy in the first post of this series). All of them also result in distributed system architectures.

Distributed systems are hard

Therefore, it makes sense to take a quick peek at the characteristics of distributed systems. The shortest “definition” of distributed systems I know is:

Everything fails, all the time. -- Werner Vogels (CTO Amazon)

This statement feels a bit pessimistic. Why does the CTO of a company that has proven it knows how to build distributed systems make such a statement? The reason is probably rooted in the failure modes of distributed systems. Remote communication exhibits some failure modes that simply do not exist within a process boundary:

- Crash failure - a remote peer responds as expected until it stops working, neither accepting requests nor responding anymore.

- Omission failure - sometimes a remote peer responds, sometimes it does not. From a sender’s perspective, the connection feels sort of “flaky”.

- Timing failure - the remote peer responds, but it takes too long (with respect to an agreed-upon maximum response time). In practice, this tends to be the hardest failure mode, as latency usually spreads very fast in distributed systems.

- Response failure - the remote peer responds in time, but the contents of the response are wrong. Besides incomplete or corrupted messages, this failure mode is often seen as a result of eventual consistency, i.e., if an update has not yet propagated to all involved peers.

- Byzantine failure [1] - the remote peer runs riot and you have no idea if, when and what it will respond, whether the response will be correct or wrong, etc. This is a very nasty failure mode that does not only occur when systems are under attack (e.g., a denial-of-service attack). You need a special class of algorithms to detect and handle Byzantine failures.
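To illustrate why crash and timing failures are so hard to handle, here is a minimal Python sketch using `asyncio.wait_for`. The peers are simulated coroutines with hypothetical names, not a real remote API. The point is that the caller only observes “no answer within the timeout” and cannot tell whether the peer crashed, is merely slow, or the message got lost.

```python
import asyncio


class RemoteCallFailed(Exception):
    """Raised when a (simulated) remote peer does not answer in time."""


async def healthy_peer() -> str:
    await asyncio.sleep(0)  # answers immediately
    return "ok"


async def slow_peer() -> str:
    # Timing failure: the peer answers, but far too late.
    await asyncio.sleep(0.5)
    return "late answer"


async def crashed_peer() -> str:
    # Crash failure: the peer never answers at all.
    await asyncio.Event().wait()
    return "unreachable"


async def call_with_timeout(peer, timeout_s: float) -> str:
    """Call a peer, but give up after timeout_s seconds."""
    try:
        return await asyncio.wait_for(peer(), timeout=timeout_s)
    except asyncio.TimeoutError:
        # All the caller sees is silence: crashed, slow, or message lost?
        raise RemoteCallFailed(f"{peer.__name__}: no response within {timeout_s}s")


async def main() -> list[str]:
    outcomes = []
    for peer in (healthy_peer, slow_peer, crashed_peer):
        try:
            outcomes.append(await call_with_timeout(peer, timeout_s=0.1))
        except RemoteCallFailed as err:
            outcomes.append(f"failed ({err})")
    return outcomes


if __name__ == "__main__":
    for line in asyncio.run(main()):
        print(line)
```

Note that the slow and the crashed peer produce exactly the same outcome for the caller, even though the slow peer would eventually have answered.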

As written before, these failure modes do not exist within process boundaries. Unfortunately, most of our computer science education (and also most of our professional careers) silently assumes in-process communication: calls to other peers always respond instantaneously and always provide correct results (at the technical level).

As a consequence, the mental model of software engineers is sort of “If X then Y”: everything is deterministic. We create our designs, our algorithms and our code assuming deterministic behavior everywhere.

But due to their failure modes, distributed systems act more like “If X then maybe Y”, i.e., there is always a chance that things go wrong, and we cannot anticipate when and how things will go wrong. At the application level this results in effects like:

- Lost messages - requests never reach the recipient, responses never reach the caller, messages or events get lost on their way.

- Incomplete messages - only parts of the message are delivered to the recipient.

- Duplicate messages - the same message arrives two or more times at the recipient. [2]

- Distorted messages - the contents of the message are corrupted partially or completely. While complete corruption is usually easy to identify, partial corruption can sometimes go undetected and cause faulty behavior.

- Delayed messages - messages arrive only very slowly. If this is not detected and handled early, it can quickly lead to a complete application stall, or worse, if all threads of the processes involved get blocked waiting for messages to arrive.

- Out-of-order message arrival - messages arrive in a different order than they were sent. This typically becomes problematic if the messages are causally dependent. E.g., if in banking the withdrawal message arrives at the receiver before the deposit message, even though the sender sent them in the opposite order, the withdrawal can get declined although the deposit would have covered it.

- Partial, out-of-sync local memory - a distributed system does not have a perfectly synchronized global state across all nodes. Each node has its own view of the global state, which is more or less in sync with the (theoretical) global state. This is an effect of message loss, out-of-order messages, etc. As a result, if a part of a distributed system triggers an action based on its current state, the action can turn out in hindsight to have been wrong (due to delayed state updates that arrive only after the action).

- and many more …
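The duplicate and out-of-order effects can be illustrated with a small receiver-side sketch. It assumes, hypothetically, that a single sender numbers its messages 1, 2, 3 and so on, and that the receiver may hand them to the application only in that order. Duplicates are dropped and early arrivals are buffered, which puts the deposit of the banking example back in front of the withdrawal. What it does not do: a message that is lost for good leaves a permanent gap, so everything behind it waits forever (the delayed-message stall) unless the sender retransmits.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Message:
    seq: int       # sender-assigned sequence number, starting at 1
    payload: str


@dataclass
class ResequencingReceiver:
    """Delivers messages from ONE sender in send order, each at most once."""
    next_seq: int = 1
    pending: dict[int, Message] = field(default_factory=dict)
    delivered: list[str] = field(default_factory=list)

    def receive(self, msg: Message) -> None:
        if msg.seq < self.next_seq or msg.seq in self.pending:
            return  # duplicate: already delivered or already waiting
        self.pending[msg.seq] = msg
        # Release every message that is now contiguous with what we delivered.
        while self.next_seq in self.pending:
            self.delivered.append(self.pending.pop(self.next_seq).payload)
            self.next_seq += 1


if __name__ == "__main__":
    rx = ResequencingReceiver()
    # The withdrawal (seq 2) overtakes the deposit (seq 1) and arrives twice.
    for m in [Message(2, "withdraw 100"), Message(1, "deposit 100"), Message(2, "withdraw 100")]:
        rx.receive(m)
    print(rx.delivered)  # ['deposit 100', 'withdraw 100']
```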

For those who think they are safe because they use an asynchronous communication means like a message queue: these effects are independent of the actual communication style. They occur whenever remote communication is involved. [3]

I will discuss the effects of going distributed in a lot more detail in later posts. For the moment, it is sufficient to understand that remote communication adds a probabilistic element of unreliability that cannot be anticipated, but hits you at unexpected times and often in unexpected ways.

Reusability means tight coupling

If we combine the imponderables of distributed systems with the concept of reusability, we notice an additional twist.

To increase the availability of a distributed system, you need to avoid cascading failures. A cascading failure is a failure that happens in one system part and causes a failure in another system part, i.e., it cascades from one part to another.

To avoid cascading failures, you try to isolate the different parts of a distributed system from each other. Isolation means that a failure in one part can be contained in that part and does not cascade to another part. This way, most parts of the system remain functional, and from the outside you only experience a local, partial failure.

On a functional level, isolation means that you try to couple the parts as loosely as possible. The fewer functional dependencies you have between two parts of a system, the smaller the likelihood that a failure in one part affects the other part.

It is often forgotten that loose functional coupling is a prerequisite for working isolation. There are a lot of options on the technical level to detect and recover from (or at least mitigate) partial failures, but without loose coupling on the functional level it usually is not possible to isolate a partial failure.

If we look at reusability, we notice that it is the tightest form of coupling possible: if the reused part is not available, the reusing part cannot complete its work, by definition. Thus, aiming for reusability in a distributed system means introducing cascading failures by definition, i.e., compromising availability by design. [4]
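As a back-of-the-envelope illustration, assuming independent failures and no fallback behavior (both simplifications): if a request can only complete when all $n$ parts on its reuse chain are available, their availabilities $A_i$ multiply.

$$
A_{\text{request}} = \prod_{i=1}^{n} A_i, \qquad A_i = 0.999,\ n = 5:\quad A_{\text{request}} = 0.999^{5} \approx 0.995
$$

In other words, five parts that each reach 99.9% availability (about 8.8 hours of downtime per year) yield a request path that is only about 99.5% available (about 44 hours of downtime per year).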

Additionally, a design for reusability tends to lead to long activation paths. The activation path is the chain of internal remote calls a distributed application needs to execute to respond to an external request; its length is the number of those calls. If you go for reusability, your dominant train of thought at design time is to find potentially reusable parts that you can factor out. This leads to long call chains, which in distributed systems turn into long activation paths.

Hence, a design for reusability also tends to lead to bad response times. This is also true if you use message- or event-driven communication. The external caller waits for a response, i.e., the request has synchronous properties, no matter how you implement it on a technical level. [5]
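A rough sketch of why long activation paths hurt response times, assuming a strictly sequential call chain and independent per-call latencies (both simplifications; the function names are illustrative). The latencies add up, and the chance that at least one call on the path lands in its slow tail grows quickly with the path length.

```python
def chain_latency_ms(per_call_ms: list[float]) -> float:
    """End-to-end latency of a strictly sequential activation path."""
    return sum(per_call_ms)


def p_any_call_slow(n_calls: int, p_slow_per_call: float = 0.01) -> float:
    """Probability that at least one of n independent calls is 'slow'.

    p_slow_per_call = 0.01 means each call exceeds its own
    99th-percentile latency 1% of the time.
    """
    return 1.0 - (1.0 - p_slow_per_call) ** n_calls


if __name__ == "__main__":
    print(chain_latency_ms([20.0, 35.0, 15.0, 40.0]))  # 110.0
    for n in (1, 10, 50):
        print(n, round(p_any_call_slow(n), 3))
    # prints: 1 0.01 / 10 0.096 / 50 0.395
```

With ten sequential calls, almost one request in ten already hits at least one slow tail, even though each single call is slow only one time in a hundred.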

Avoid reusability across process boundaries

The previous section can be summarized as follows:

Aiming for reusability in a distributed system compromises system availability and response times by design.

Therefore, you should not aim for reusability in the design of distributed systems, e.g., by going for reusable services. Reusability compromises loose functional coupling, which is the prerequisite for robust, highly available and fast service designs.

Instead of reusability, you should rather strive for other concepts like replaceability. Replaceability means that you can replace the implementation of a service without affecting other services. While this is not sufficient to ensure loose coupling, it is a step in the right direction. [6]
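A minimal in-process sketch of the idea, using Python's `typing.Protocol` as the contract. In a service landscape, the contract would be the remote API instead; all names and prices here are hypothetical. Callers depend only on the contract, so one implementation can replace another without touching them. As stated above, this alone does not guarantee loose functional coupling.

```python
from typing import Protocol


class ShippingQuoteService(Protocol):
    """The published contract. Callers depend on this and nothing else."""

    def quote_cents(self, weight_grams: int) -> int: ...


class FlatRateQuotes:
    """Original implementation."""

    def quote_cents(self, weight_grams: int) -> int:
        return 499


class WeightBasedQuotes:
    """Replacement with different internals but the same contract."""

    def quote_cents(self, weight_grams: int) -> int:
        return 299 + (weight_grams // 100) * 50


def order_total_cents(items_cents: int, weight_grams: int,
                      shipping: ShippingQuoteService) -> int:
    # The caller does not know or care which implementation is behind the contract.
    return items_cents + shipping.quote_cents(weight_grams)


if __name__ == "__main__":
    print(order_total_cents(2000, 1200, FlatRateQuotes()))     # 2499
    print(order_total_cents(2000, 1200, WeightBasedQuotes()))  # 2899
```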

You will still find functionalities in distributed environments that you want to reuse. But to avoid compromising availability and response times, you should rather implement them in a way that both the reusing and the reused part live in the same process context. Libraries, for example, offer a good option to achieve this.

Inside a process boundary, reusability still leads to very tight coupling, but it does not harm you: if the reused part fails due to a process crash, the reusing part is also dead because it lives in the same crashed process. Thus, from a logical point of view, the reused part cannot fail in a non-deterministic way from the reusing part’s perspective.

To wrap up this consideration:

The value of reuse in distributed systems unfolds inside a process boundary, not across service boundaries.

Thus, selling a new distributed architectural paradigm with “reuse” leads the wrong way and often results in brittle and slow solutions.

Reusability vs. usability

“But”, you might say, “there are all those business services that I need to reuse. Take the accounting service as an example. Shall I really put accounting into a library and plug that library into every single service that needs to make an accounting transaction? You can’t be serious!”

While it is true that it usually does not make a lot of sense in a service-based architecture to put the whole accounting functionality into a library and plug it into every service that needs it, the statement still misses the point, because it does not refer to reusability, but to what I tend to call “usability” [7].

As the difference between the two is subtle but essential, and as the two very often get confused, I will try to clarify the distinction here.

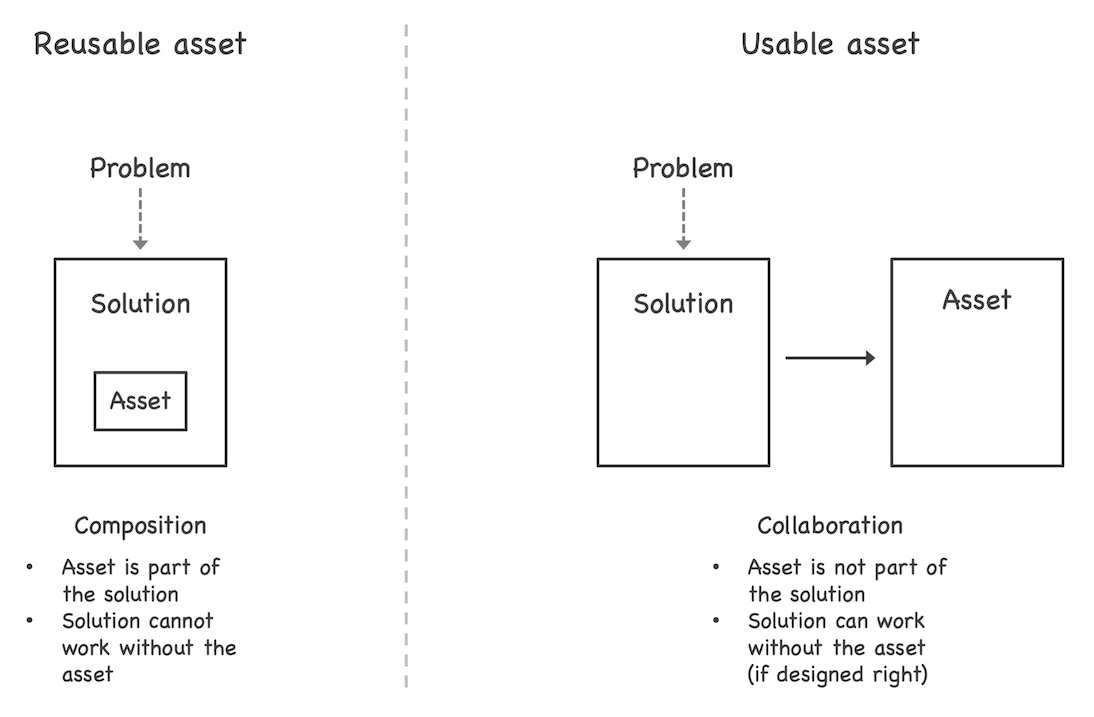

Reusability is based on composition. The reusable asset is part of the solution you create to address a given problem. E.g., you need to create a text processor. Part of the solution are text buffers. As you need text buffers in multiple parts of your text processor, you might decide to create a reusable implementation (or use an existing reusable implementation).

An important property of reuse is that the reusing part – here the text processor – does not work without the reused asset – here the text buffer. The reused functionality is an integral part of the solution that (re-)uses it.

Usability, on the other hand, is based on collaboration. The usable asset is not part of the solution you create to address a given problem. E.g., if we stick with the text processor, you might want to attach an external grammar and style checking service (e.g., Grammarly). The checker is not a part of your text processor, but an asset your solution collaborates with.

If the used asset does not work, your solution can still work – if you designed the collaboration correctly. An important aspect of a usability dependency between two assets is their functional independence. They fulfill independent tasks. It is not that one is needed for the other to fulfill its task. That would be reuse.
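The difference can be sketched in a few lines of Python. All names are hypothetical, and the external checker is modeled as a plain function that may raise instead of a real remote API. The text buffer is composed into the text processor (reuse): without it, nothing works. The style checker is a collaborator (usage): if it fails, the processor degrades gracefully and keeps working.

```python
from typing import Callable, Optional


class TextBuffer:
    """Reused asset: an integral part of the text processor (composition)."""

    def __init__(self) -> None:
        self._chunks: list[str] = []

    def append(self, text: str) -> None:
        self._chunks.append(text)

    def text(self) -> str:
        return "".join(self._chunks)


class CheckerUnavailable(Exception):
    """Raised by the (hypothetical) external style checker when it cannot answer."""


StyleChecker = Callable[[str], list[str]]


class TextProcessor:
    def __init__(self, checker: Optional[StyleChecker] = None) -> None:
        self.buffer = TextBuffer()  # reuse: no buffer, no text processor
        self.checker = checker      # usage: an optional collaborator

    def type_text(self, text: str) -> None:
        self.buffer.append(text)

    def style_hints(self) -> list[str]:
        """Ask the checker for hints; if it is unavailable, show none."""
        if self.checker is None:
            return []
        try:
            return self.checker(self.buffer.text())
        except CheckerUnavailable:
            return []  # editing keeps working, just without hints


def unavailable_checker(text: str) -> list[str]:
    """Simulates a style service that is currently down."""
    raise CheckerUnavailable("style service timed out")


if __name__ == "__main__":
    editor = TextProcessor(checker=unavailable_checker)
    editor.type_text("Hello, ")
    editor.type_text("world")
    print(editor.buffer.text())  # Hello, world
    print(editor.style_hints())  # []
```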

Prefer usability across process boundaries

Coming back to the accounting service example: based on the distinction made in the previous section, an accounting service is a usable asset, not a reusable one. Another service, e.g., a checkout service of an e-commerce solution, could complete its task without the accounting service. Of course, it eventually needs to send the transactions made to the accounting service. But if the accounting service is not available, it could buffer the transactions and send them later when the accounting service is available again.

The two services could also be connected via a message queue or similar, with the accounting service consuming the transactions of the checkout service in a completely asynchronous fashion. The key point is that with a usability dependency between the services, you can implement the checkout service in a way that it can complete its external request (checkout for a buying customer), even if the used accounting service is not available.
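A minimal sketch of a checkout service that keeps working while accounting is down. `FakeAccountingClient` is a hypothetical in-process stand-in for the real remote call. Transactions go into a buffer first and are forwarded whenever accounting is reachable; in practice, `flush_outbox` would also be triggered periodically (not shown).

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Transaction:
    order_id: str
    amount_cents: int


class AccountingUnavailable(Exception):
    pass


class FakeAccountingClient:
    """Hypothetical stand-in for the remote accounting service."""

    def __init__(self) -> None:
        self.up = False
        self.booked: list[Transaction] = []

    def book(self, tx: Transaction) -> None:
        if not self.up:
            raise AccountingUnavailable()
        self.booked.append(tx)


class CheckoutService:
    def __init__(self, accounting: FakeAccountingClient) -> None:
        self.accounting = accounting
        self.outbox: deque[Transaction] = deque()

    def checkout(self, order_id: str, amount_cents: int) -> str:
        # Complete the customer's request; accounting may happen later.
        self.outbox.append(Transaction(order_id, amount_cents))
        self.flush_outbox()
        return f"order {order_id} confirmed"

    def flush_outbox(self) -> None:
        """Forward buffered transactions in order; stop at the first failure."""
        while self.outbox:
            try:
                self.accounting.book(self.outbox[0])
            except AccountingUnavailable:
                return  # try again on the next flush
            self.outbox.popleft()


if __name__ == "__main__":
    accounting = FakeAccountingClient()   # starts "down"
    shop = CheckoutService(accounting)
    print(shop.checkout("A-1", 2599))     # order A-1 confirmed
    print(len(shop.outbox))               # 1
    accounting.up = True
    shop.flush_outbox()
    print(accounting.booked)              # [Transaction(order_id='A-1', amount_cents=2599)]
```

Two caveats of this sketch: the buffer lives in memory and is lost if the checkout process crashes (a real implementation would persist it, e.g., together with the order), and with a real remote call a booking may succeed while its response gets lost, so the accounting side should tolerate duplicates, e.g., by deduplicating on `order_id`.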

A counter-example: take a typical partner system/service implementation, e.g., in an insurance context. This is a reuse dependency. Whenever you try to file a claim, the claims service needs to access the partner service to retrieve the required client information. If the partner service is not available, the claims service cannot proceed, i.e., on a functional level, it is also unavailable.

You might call this distinction hair-splitting and argue that what I call “usability” also is reusability because a usable asset can be used from multiple places, i.e., is reusable. Additionally, anything that is reusable must be usable in the first place (using a different definition of “usability” – see my footnote about “usability”). Thus, does the distinction make sense?

Based on my experience with CORBA, EJB, SOA, microservices and more over the last 25+ years, this distinction makes a lot of sense. While I admit that the distinction seems subtle, might not be easy to grasp and definitely is hard to master, the consequences are grave.

The solution designs and the resulting runtime properties you get from applying one or the other are fundamentally different. Design for reusability will result in brittle and slow solutions that are an operations nightmare at scale. Design for usability (i.e., functional independence) paves the way toward robust, responsive and highly available system landscapes that scale nicely. [8]

And again, what is left for reusability

In this post, we have looked at the price you pay for reusability in distributed systems because most paradigms that are sold with the promise to amortize via reusability are distributed (e.g., DCE, CORBA, EJB, DCOM, SOA or microservices).

We have seen that reusability in such distributed systems is a false friend because reusability leads to very tight coupling. If we combine tight coupling with the imponderables of remote communication, we end up with brittle and slow solutions. Thus, reusability should be avoided across process boundaries (e.g., between services).

We also discussed the subtle but essential distinction between “usability” and “reusability”, which are often mixed up in arbitrary ways. In my view, this confusion is one of the main reasons for the whole reusability fallacy. The problem with confusing the two is that, especially in distributed system design, reusable assets result in very undesirable system properties, while usable assets that were designed with functional independence in mind lead to desirable system properties. Thus, understanding that distinction is fundamental.

To sum up the ideas of this post:

Reusability in distributed system design is a false friend. Avoid it.

Focus on reusability inside process boundaries instead.

Go for usability (i.e., functional independence) across process boundaries.

In the next and last part of this little series, I will sum up what we have discussed so far and then give a few practical recommendations: when and where to aim for reusability, when to avoid it, and what to do instead.

I will leave it here for this (way too long) post and hope it contains some food for thought. Stay tuned for the next and last part.

[Update] September 15, 2020: Added delayed messages as possible effect of distributed failure modes at the application level. Added description of “backup request” pattern as new footnote #4.

[1] The odd-sounding name of the failure mode is derived from the title of the first (or at least the most popular) paper that describes this failure mode: “The Byzantine Generals Problem” by Leslie Lamport, Robert Shostak and Marshall Pease, originally published in 1982 in the ACM Transactions on Programming Languages and Systems.

[2] It is relatively simple to implement “at least once” delivery, i.e., a message is delivered exactly once most of the time, but sometimes more than once. It is also relatively simple to implement “at most once” delivery, i.e., a message is delivered exactly once most of the time, but sometimes not at all. But it is basically impossible to implement “exactly once” delivery, i.e., that a message gets delivered once and only once under all conditions, at least if you want to guarantee progress, i.e., if you do not want to wait arbitrarily long when something goes wrong. This has been scientifically proven for many years, and unless the laws of physics or the way we do remote communication change fundamentally, it will not change, no matter what some tool vendors try to make you believe.
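A small deterministic simulation of the two delivery guarantees. The `losses` iterator is a hypothetical stand-in for the network: each `True` means that one message (a request or an ack) gets lost. Because a sender cannot distinguish a lost request from a lost ack, retrying is the only way to get “at least once”, and retrying is exactly what creates duplicates.

```python
from typing import Callable, Iterator


def send_at_most_once(msg: str, deliver: Callable[[str], None],
                      losses: Iterator[bool]) -> None:
    """Fire and forget: one attempt, no retry. A lost request is simply gone."""
    request_lost = next(losses)
    if not request_lost:
        deliver(msg)


def send_at_least_once(msg: str, deliver: Callable[[str], None],
                       losses: Iterator[bool], max_attempts: int = 10) -> int:
    """Resend until an ack comes back; returns the number of attempts used."""
    for attempt in range(1, max_attempts + 1):
        request_lost = next(losses)
        if not request_lost:
            deliver(msg)
        # An ack is only sent if the request arrived, and it can get lost, too.
        # The sender sees the same silence in both cases, so it must resend.
        ack_lost = request_lost or next(losses)
        if not ack_lost:
            return attempt
    # Bounding the retries preserves progress, but delivery is no longer guaranteed.
    raise TimeoutError("no ack after max_attempts")


if __name__ == "__main__":
    inbox: list[str] = []
    # Attempt 1: request arrives, ack lost. Attempt 2: request and ack arrive.
    attempts = send_at_least_once("pay 10", inbox.append, iter([False, True, False, False]))
    print(attempts, inbox)  # 2 ['pay 10', 'pay 10']

    inbox = []
    send_at_most_once("pay 10", inbox.append, iter([True]))  # request lost
    print(inbox)  # []
```

Deduplicating on the receiver side (e.g., by a message ID) is the usual way to cope with these duplicates. It makes the processing idempotent, but it does not turn the delivery itself into exactly-once.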

[3] Also, the common response “But those effects occur so rarely that we can safely ignore them” is not helpful. The probability that a failure will occur is higher than zero. This means the question is not if the effects described occur, but only when they occur. If your application is not prepared to handle them, it will crash in the best case. In the worst case, it will do something completely wrong that nobody notices but that affects your customers. To make things worse, those failure-related effects tend to occur with a much higher probability when your application is under high load, i.e., when it makes a lot of money, supports your customers in some critical work or similar. This is the most undesirable point in time for struggling with strange and hard-to-debug problems. Still, most application implementations do not consider those effects of remote communication. Based on my experience, most applications, including today’s fashionable microservice-based solutions, are written as if remote communication behaved exactly like local communication, i.e., 100% reliable, fast, no out-of-order issues, perfect global state, etc., which is not true.

[4] In theory (and sometimes also in practice), you can launch several replicas of the same service and then implement a proxy, either in the calling service or in front of the replicated services, that watches the request processing duration. If it takes too long (timeout 1), the proxy sends the same request to a different replica, trying not to exceed the overall time span allowed for processing the given request (timeout 2). This is basically the idea of the “backup request” pattern as Jeffrey Dean and Luiz André Barroso describe it in their paper “The Tail at Scale” (they use slightly different terminology, but it is what became better known as the “backup request” pattern). Still, be aware that this means you multiply the resources, code and infrastructure complexity needed to provide the required availability, which you would not need if you designed your application better on a functional level. Or in harsher words: you waste time, effort, money and resources to cover up your functional design deficiencies. Personally, I think that is a poor trade, even if I see it over and over again (to be frank: this has been the recurring theme of IT for the last 50 years, trading effort and resources for poor designs, not only in the context of microservices).
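A minimal `asyncio` sketch of the backup request idea with exactly two replicas. The function names, replica names and latencies are hypothetical, and this illustrates the pattern as described above, not the implementation from the paper. `hedge_after_s` corresponds to timeout 1 and `overall_timeout_s` to timeout 2. For brevity, a replica that fails fast simply wins the race and propagates its error; a production version would handle that case.

```python
import asyncio
from typing import Awaitable, Callable, Sequence


async def backup_request(
    call: Callable[[str], Awaitable[str]],
    replicas: Sequence[str],
    hedge_after_s: float,      # timeout 1: when to send the backup request
    overall_timeout_s: float,  # timeout 2: total time budget for the request
) -> str:
    """Send to replicas[0]; if it has not answered after hedge_after_s,
    send the same request to replicas[1] and use whichever answers first."""
    tasks: list[asyncio.Task] = []

    async def race() -> str:
        tasks.append(asyncio.ensure_future(call(replicas[0])))
        done, _ = await asyncio.wait(tasks, timeout=hedge_after_s)
        if not done:
            tasks.append(asyncio.ensure_future(call(replicas[1])))  # the backup request
            done, _ = await asyncio.wait(tasks, return_when=asyncio.FIRST_COMPLETED)
        return done.pop().result()

    try:
        return await asyncio.wait_for(race(), timeout=overall_timeout_s)
    finally:
        for task in tasks:
            task.cancel()  # stop whatever is still running (e.g., the slower replica)


# Simulated replicas (hypothetical latencies): replica-a is stuck in its slow tail.
LATENCY_S = {"replica-a": 0.5, "replica-b": 0.02}


async def fake_call(replica: str) -> str:
    await asyncio.sleep(LATENCY_S[replica])
    return f"answer from {replica}"


async def demo() -> str:
    return await backup_request(fake_call, ["replica-a", "replica-b"],
                                hedge_after_s=0.05, overall_timeout_s=0.3)


if __name__ == "__main__":
    print(asyncio.run(demo()))  # answer from replica-b
```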

[5] Many people confuse logical and technical communication patterns. You can implement asynchronous behavior using synchronous technical means and vice versa. You can implement events on a logical level using REST on a technical level. You can implement RPC on a logical level using a message bus on the technical level. And so on. This is a common source of confusion and often leads to inconsistent design decisions. I will come back to that topic in a later post.
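As a small illustration of RPC on a logical level on top of one-way messaging on the technical level, here is a sketch that uses Python's `queue.Queue` as a stand-in for a message bus. Each request carries its own reply queue (similar in spirit to the reply-to conventions some message brokers offer); all names are hypothetical. Although only one-way messages flow, the caller blocks until the reply arrives, so the interaction keeps its synchronous properties.

```python
import queue
import threading

# One-way "technical" channel; each item is (payload, reply_queue).
request_channel: queue.Queue = queue.Queue()


def squaring_consumer() -> None:
    """Message consumer: reads one-way messages, answers on the reply queue."""
    while True:
        number, reply_queue = request_channel.get()
        reply_queue.put(number * number)


def rpc_square(number: int, timeout_s: float = 1.0) -> int:
    """Logically a synchronous call, technically two one-way messages."""
    reply_queue: queue.Queue = queue.Queue(maxsize=1)
    request_channel.put((number, reply_queue))
    # Blocks until the reply arrives, or raises queue.Empty after timeout_s.
    return reply_queue.get(timeout=timeout_s)


threading.Thread(target=squaring_consumer, daemon=True).start()

if __name__ == "__main__":
    print(rpc_square(7))  # 49
```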

[6] I will write in more detail about how to ensure loose coupling on a functional level in some later posts. If you do not want to wait for those posts, you might want to have a look at this slide deck. You can also find a recording of an abridged version of the talk here (I only had 45 min for a 60 min talk, thus I had to cut a few corners). People who understand German can alternatively watch an early complete version of the talk here.

[7] Being a quite generic term in the English language, “usability” has multiple definitions. Here I use it in the context of dependencies between building blocks of a software solution. Admittedly, I am not sure if the term is chosen optimally because even in that context “usability” has more than one meaning. Still, I decided to use it due to the lack of a better term. If you know a better term that describes a usage relation between two assets that are functionally independent, please let me know.

[8] Actually, it is a bit more complicated than I described it here. You can create reusability without composition. But as most people struggle to make that distinction and thus tend to confuse it, I only look at compositional reusability here. Also, a lot more things must be done right to build a system that is robust, highly available, responsive and potentially scalable. But if you do not design for usability (i.e., functional independence of the assets), you preclude this option by design, and no technical measures will get you there.
