Why we need resilient software design - Part 1

The rise of distributed systems

In this post series, I will discuss what resilience means for IT systems and why resilient software design has become mandatory.

In one of my previous posts, I discussed why I think resilience has probably become the most important paradigm of the 21st century. Still, the examples used were mostly about people and organizations. Thus, you might ask yourself whether resilience is just as relevant for IT system design.

The short answer is: Yes, it is.

But the reason for it is probably a bit different than you might think, and it takes a little time to explain. That is why I split it up into a little blog series:

- The rise of distributed systems (this post)

- The effects of distribution

- Why your systems will fail for sure

- Why failures will hit you at the application level

- The need for resilient software design

Let us start with a look at the past and the evolution of enterprise system landscapes.

Good ol’ times

Put simply, resilience is about keeping up correct operation in the face of unexpected (external) adverse events – or at least recovering from them in a timely manner, while offering a gracefully degraded service in the interim. 1

In the past, we left such availability issues to our operations teams. They ran our applications on some kind of high availability (HA) infrastructure: dedicated HA hardware, software cluster solutions, multiple replicas of our applications behind a load balancer, or the like.

Hence, as developers we were able to ignore most resilience issues. We only had to make sure not to implement any software bugs that let the application crash or return wrong results.

Maybe we had to adhere to some rules that operations imposed on us to make sure the application worked on their HA infrastructure solution. E.g., we were only allowed to add serializable data objects to our session state so that the application servers could replicate the state between instances as needed.
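To make the serializable-session rule a bit more tangible, here is a minimal sketch. It uses Python's `pickle` module as a stand-in for whatever serialization mechanism an application server would use. The function names `replicate` and `can_replicate` and the session contents are made up for illustration.

```python
import pickle
import threading


def replicate(session_attribute):
    """Simulate copying a session attribute to a second server instance."""
    wire_bytes = pickle.dumps(session_attribute)  # leaves instance A
    return pickle.loads(wire_bytes)               # arrives at instance B


def can_replicate(session_attribute) -> bool:
    """Return True if the attribute survives the trip between instances."""
    try:
        replicate(session_attribute)
        return True
    except (TypeError, pickle.PicklingError):
        return False


# Plain data can be shipped to another instance.
plain_cart = {"customer_id": "c-42", "items": ["book", "pen"]}

# A process-local resource (here: a lock) cannot be serialized.
cart_with_lock = {"customer_id": "c-42", "lock": threading.Lock()}

print(can_replicate(plain_cart))      # True
print(can_replicate(cart_with_lock))  # False
```

An attribute that only holds plain data survives the round trip. One that holds a process-local resource does not, which is the kind of thing such a rule was meant to rule out.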

But that was basically it. Besides these few potential limitations needed for the infrastructure HA solutions to work properly, we were able to develop our software as if it was running in a single process on a single machine that will never, ever fail.

Good ol’ times … ;)

Based on those habits from the past, we still see a lot of people in software development asking why operations cannot take care of the resilience needs and let developers “focus on their work”. Or they do not ask but develop their software the same way they always did – as if the solution were running in a single process on a single machine that will never, ever fail.

And some of the recent infrastructure developments seem to justify such behavior:

- Container schedulers making sure a specified number of replicas is always running, offering auto-scaling features, etc.

- Service meshes offering timeout monitoring, automatic retries, advanced deployment and load balancing strategies, etc.

- API gateways taking care of rate limiting, failover, etc.

- And more …

Thus, it seems that software developers these days can safely return to their “let ops take care of resilience” habit of the past, even if there was that dreadful period at the beginning of the microservices movement 5-10 years ago when they needed to take care of such “distracting annoyances”.

Back to good ol’ times … ;)

Unfortunately, this is a misconception.

It is not that easy anymore, and probably will never become that easy again.

The road to distributed everything

But why is it this way?

It has to do with distributed systems and their effects.

For a mostly monolithic and isolated system this reasoning might work: We could leave taking care of basic availability to operations. They would run our application on their HA infrastructure of choice and everything would be okay. The application availability would be good, provided that we as developers did a good job and did not deliver an application with lots of bugs, bad response time behavior or any other development-related issue.

Unfortunately, this situation has been gone for a long time. Mostly monolithic and isolated systems are a thing of the past – at least in enterprise software contexts. As Chas Emerick stated in his presentation “Distributed Systems and the End of the API”:

(Almost) every system is a distributed system. – Chas Emerick

The software you develop and maintain most likely is part of a (big) distributed system landscape.

It was not always that way and the changes happened gradually, silently, in a way that could escape you for a long time until you eventually realized that things had changed completely.

I remember, when working as a data center operator in the early 1980s, that the company I worked for employed a fleet of data typists. Their only job was to take the output from one application and enter it into another application. 2

Eventually, the data typists were replaced by batch jobs: The one application exported its data to a file via a batch job and the other application imported it via another batch job. These already were some interconnected systems and you could call it sort of a distributed system landscape.

But because the batch processing temporally decoupled the applications, the harmful effects of distribution were mostly mitigated. If something went wrong, you usually had more than enough time to fix the problem and rerun the batch jobs. Usually, this was done by the operations team. Developers were not affected unless they had implemented some nasty bug in the application that caused the problem and that they needed to fix immediately.
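The temporal decoupling of such a file-based exchange can be sketched roughly as follows. This is an illustration under assumptions, not how any particular system worked: the file name, the column names and the key-based upsert in the import job are all made up.

```python
import csv
import tempfile
from pathlib import Path


def export_job(orders, export_file: Path) -> None:
    """Batch job of application A: dump the data to a file."""
    with export_file.open("w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["order_id", "amount"])
        writer.writerows(orders)


def import_job(export_file: Path, target: dict) -> int:
    """Batch job of application B: load the file some time later."""
    with export_file.open(newline="") as f:
        rows = list(csv.DictReader(f))
    for row in rows:
        # Assumption: writing by key makes a rerun harmless.
        target[row["order_id"]] = float(row["amount"])
    return len(rows)


def run_demo() -> dict:
    with tempfile.TemporaryDirectory() as tmp:
        export_file = Path(tmp) / "orders.csv"
        export_job([("A-1", "19.90"), ("A-2", "5.00")], export_file)
        target = {}
        import_job(export_file, target)
        import_job(export_file, target)  # rerun, e.g., after fixing a problem
        return target


print(run_demo())
```

The two applications never talk to each other directly. The file sits between them, so a failed import can simply be repeated once the problem is fixed, without the exporting application noticing.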

And then RPC and all its descendants for exchanging data online became popular. And that changed everything because with online communication we arrived at what we usually have in mind when we talk about distributed systems. 3

Of course, it was not an overnight change. It started slowly, just a few connections, still with a batch update backup. Then some more connections. Then more pressure from the business side wanting quicker updates across application boundaries, resulting in some more online connections. And so on until batch updates mostly became a faint memory only used in conjunction with so-called “legacy systems”.

More peers …

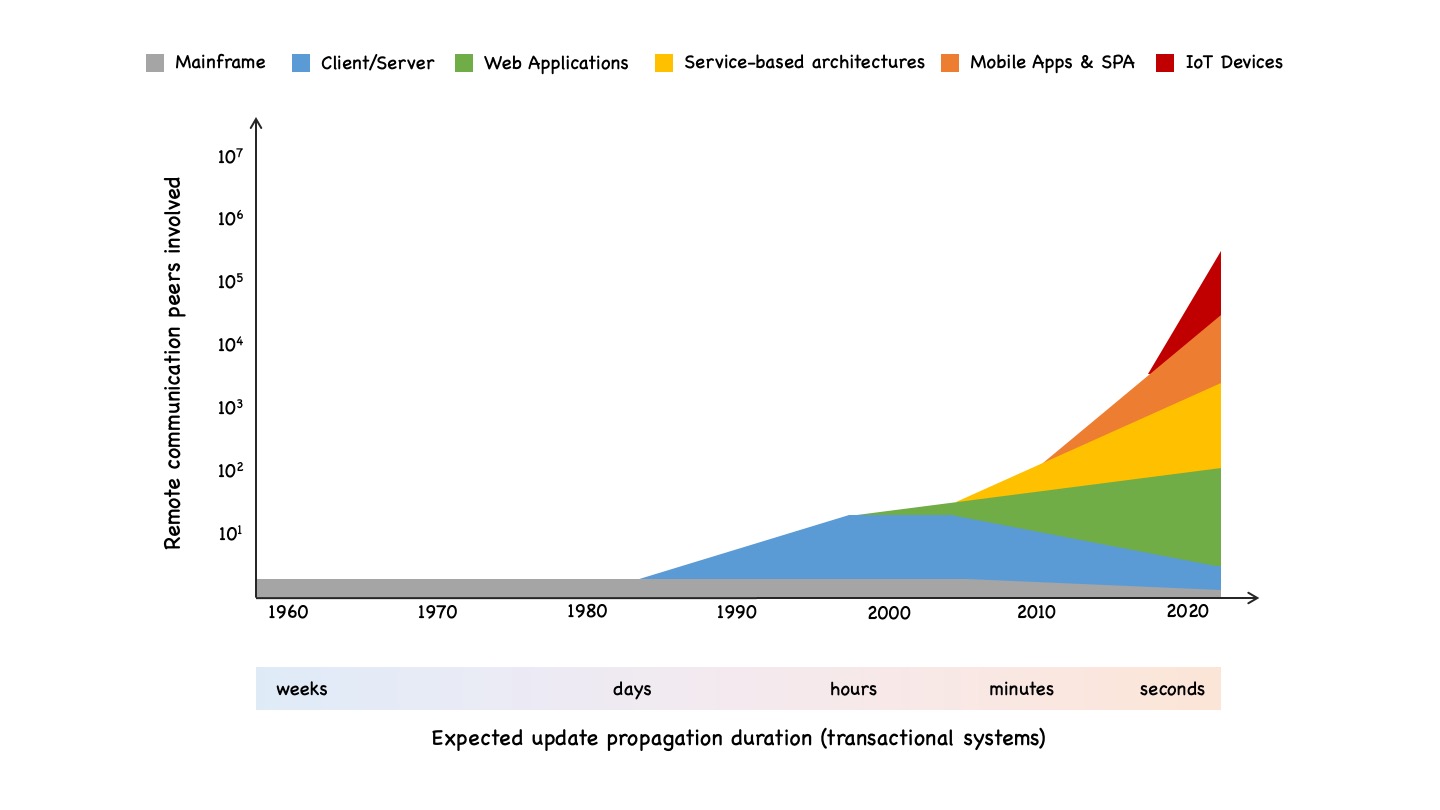

Let me discuss this evolution in a bit more detail: It started with mainframe computers. Usually you had one of those machines. And if you had a second one, it was completely opaque for the developers. For developers, there was a single big machine that ran their programs – be it batch jobs or online transactions 4.

From the perspective of a developer everything ran on a single machine and the infrastructure components took care of copying data between application parts if you were using a transaction monitor like, e.g., CICS.

In the 1980s, the client/server paradigm became popular in conjunction with microprocessor-based servers, usually running a UNIX variant, and the rise of LANs and PCs. Instead of one computer, there was now a growing number of servers that exchanged data. And with the rise of RPC and distributed communication standards like DCE or CORBA in the early 1990s, online communication between these server machines and the clients using them became more widespread.

In the late 1990s, web-based architectures, building on Internet standards (especially HTTP), started to replace client/server architectures, while at the same time the number of communicating server machines continually rose to several dozen or even more than a hundred peers communicating over network connections.

In the early 2000s, the first wave of service-oriented architectures resulted in another rise of remotely communicating peers due to splitting up big applications into smaller services, up to several hundred or even more than a thousand communicating peers. 5

The next big increase of remotely communicating peers started with the rise of mobile apps and JavaScript-based web applications in the late 2000s and early 2010s, later leading to architectures like SPA. Many new peers, often communicating over quite unreliable mobile connections, became part of the distributed system landscapes.

The newest increase of remotely communicating peers is due to the rise of IoT and the tightening connection between IoT and enterprise software systems. This can lead to tens of thousands or more remotely communicating peers that all exchange information online.

And most likely, the number of communicating peers will grow further in the future.

… faster update propagation …

At the same time, the expectations of updates propagating through the system landscapes also continually rose. While it was okay until the 1980s that updates might take a week or longer to propagate through the transactional system landscape, the business departments then started to expect that updates did not take longer than a day or two on average.

In the early 2000s, the update propagation duration expectations were down to hours. This was the time when batch jobs often were executed on an hourly basis or more often to satisfy the demands.

But when the expectations were down to minutes in the late 2000s, more and more frequent batch jobs did not do the trick anymore. This was the time when online updates between applications became mandatory.
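A bit of back-of-the-envelope arithmetic illustrates why running batch jobs more often only helps up to a point. The model below is a deliberate simplification and entirely my assumption: an update has to pass a chain of applications, each hop is a batch job that starts every `interval_min` minutes and runs for `run_min` minutes, and in the worst case the update just misses the start of each run.

```python
def worst_case_delay_minutes(hops: int, interval_min: float, run_min: float) -> float:
    """Worst-case time for an update to pass `hops` batch-coupled applications.

    Per hop: wait up to one full interval for the next run,
    then wait for that run to finish.
    """
    return hops * (interval_min + run_min)


# Three hops, hourly batch runs, each run takes ten minutes.
print(worst_case_delay_minutes(3, 60, 10))  # 210

# Same chain with runs scheduled back to back (no waiting at all).
print(worst_case_delay_minutes(3, 0, 10))   # 30
```

In this simplified model, three hops with hourly runs of ten minutes each add up to 210 minutes. Even if the runs are scheduled back to back, the delay never drops below the summed run times, here 30 minutes. An expectation of "minutes" is therefore out of reach for such a chain, no matter how often the jobs are started.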

Meanwhile, the update propagation duration expectations are down to seconds. Actually, the typical expectation is “immediate”, but a few seconds of delay are usually (but not always) grudgingly tolerated.

For analytical systems, the evolution was a bit slower. For a long time, it was still okay that it took a week or longer until updates in transactional systems reached the analytical systems. Even today, a delay of a day or longer is still accepted in many places.

On the other hand, companies that actively use data for (fast) decision making have meanwhile shortened their feedback loops between transactional and analytical systems down to seconds, with the boundaries between those system types dissolving.

So, it can be expected that the update propagation duration expectations for analytical systems will become the same as for transactional systems in the coming years.

… and never down

Finally, it should be mentioned that the uptime expectations also grew over time. For a long time it was normal that online transaction systems were only available during (slightly extended) office hours and the night belonged completely to batch jobs and regular system maintenance.

With the rise of the World Wide Web and customer web applications, the demand for 24x7 system availability grew. For quite some time, these 24x7 availability expectations were limited to the (relatively few) customer-facing systems.

The backend systems still were not available overnight. E.g., if a customer placed a request at 8pm, it would not be processed before the next morning because the required backend systems were not available. The customer-facing systems were available 24x7, but overnight they acted like mere data caches and request buffers for the backend systems.

Eventually, the customers expected higher availability. They did not care about batch windows or the like, especially because new Internet companies raised the availability bar, delivering full services 24x7.

Meanwhile, basically everywhere users expect 24x7 availability of the systems they use, no matter if in a B2C (Business-to-Consumer), B2B (Business-to-Business) or B2E (Business-to-Employee) context.

They no longer accept batch windows. Nor do they accept scheduled maintenance windows anymore, be it for software deployments that require downtime or anything else.

We still see a few companies that schedule maintenance windows, but they are getting fewer all the time. Still scheduling maintenance windows means your IT infrastructure and processes are at least 10 years behind – and who wants to publicly display that their IT is far behind state-of-the-art? 6

Summing up

We discussed the stepwise journey from isolated monolithic applications to distributed system landscapes where applications continually communicate with each other. We also saw that the number of peers involved continually grew (and still grows), while the update propagation duration expectations became shorter and the availability expectations went to “never down” at the same time.

Today, we are faced with complex, highly distributed, continually communicating system landscapes that have to be up and running all the time. Additionally, many peers and the processing running on them live outside the boundaries of the infrastructure we control, e.g., mobile apps, SPAs and IoT devices. They run on user devices and communicate over (sometimes quite unreliable) public network connections.

In the next post, we will discuss what all this means for the failure modes that can occur and what their concrete consequences are regarding application behavior. Stay tuned … ;)

1. If that (a bit informal) definition reminded you a lot of fault tolerance: In the previous post, I discussed the relation between resilience and fault tolerance.

2. If you ask yourself why the company did not simply write some batch jobs to copy the files: Compute resources were scarce and very expensive. Disk storage was very scarce and extremely expensive. If you needed to persist some data, you usually used sequential tapes because they were a lot less expensive. But then you only had so many tape drives, and as most batch jobs needed some tape drives, tape drives were a scarce resource, too. Hence, it was economically feasible to employ people to take the output of one application and enter it into another application. With growing disk storage capacity and falling storage prices it eventually became a lot more feasible (and less error-prone) to use batch jobs to copy the data.

3. I still need to write some foundational posts about distributed systems and their effects – especially to be able to reference those posts whenever needed. Until then, you may want to have a look at “Distributed systems” by Steen and Tanenbaum, a distributed systems literature classic.

4. Note that “online” and “transaction” in the context of a mainframe do not necessarily mean what you think they do. “Online” simply meant “interactive”, i.e., that users were able to interact with those programs via a terminal. “Transaction” was more of a logical concept describing a single user interaction. Still, as most of those user interactions involved reading or writing some data from/to a database, it usually also comprised some kind of database transaction on the technical level.

5. Actually, CORBA already suggested splitting up big applications into smaller parts, called “objects” in CORBA because CORBA adhered to the object-oriented paradigm. But CORBA never became remotely as successful as SOA and thus had a much smaller impact on the IT landscapes of companies.

6. This is not necessarily the “fault” of the IT department. Sometimes the laggards who impede modernization sit in IT. But quite often IT only gets money for implementing new business features – based on the misconception that only business features deliver business value and neglecting that a competitive IT infrastructure that supports state-of-the-art software development, deployment and operations processes also creates business value. But that fallacy is the subject of a different post.
