Why we need resilient software design - Part 3

Why your systems will fail for sure

In the previous post, we discussed what distributed systems mean in terms of failure modes that can occur and what their concrete consequences are regarding application behavior.

In this post, we will see that these failure modes are not some theoretical concept, but something very real, i.e., that the question is not if your systems will fail but when and how badly they will fail.

Let us start with the “100% availability trap”, a misconception widespread among people who have not yet understood that failures in distributed systems are inevitable.

The 100% availability trap

Many people, including many software developers, fall for what I tend to call the “100% availability trap”. They assume that all remote peers, including infrastructure tools like databases, message brokers and the like, are available 100% of the time, i.e., that they are never down.

At least that is what their programming logic tells us when they reach out to remote peers. Basically, you do not find any measures in their code to handle situations if the remote peer is latent or does not answer (not even taking response or Byzantine failures into account – see the previous post for more details about failure modes).

Maybe you will find a half-hearted handling of the inevitable IOException if a remote call fails. But often you only find it because the compiler does not allow ignoring the exception (e.g., in Java), and usually it is limited to logging the exception somewhere up the call stack and then moving on. But an explicit, sensible retry mechanism and some alternative (business) logic for how to proceed if the failure persists? Typically, you will find neither.

So, the underlying (often implicit) assumption is that there won’t be any failures – and if there should be, the behavior of the system is mostly accidental.
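To make the contrast concrete, here is a minimal sketch of what deliberate handling of an unavailable peer could look like: a bounded number of retries with exponential backoff, followed by an explicit fallback if the failure persists. It is written in Python rather than Java to keep it short. The names `call_with_retry`, `fetch_price` and `cached_price` are made up for illustration, and the retry count and delays are arbitrary assumptions, not recommendations.

```python
import time


def call_with_retry(operation, fallback, attempts=3, base_delay=0.1, sleep=time.sleep):
    """Call operation(); retry on connection problems, then fall back.

    All parameters are illustrative assumptions, not recommendations.
    """
    for attempt in range(attempts):
        try:
            return operation()
        except (ConnectionError, TimeoutError):
            # Last attempt failed: stop retrying and use the fallback below.
            if attempt == attempts - 1:
                break
            # Exponential backoff: base_delay, 2 * base_delay, 4 * base_delay, ...
            sleep(base_delay * 2 ** attempt)
    # Explicit alternative (business) logic instead of accidental behavior.
    return fallback()


def fetch_price():
    """Hypothetical remote call that is currently failing."""
    raise ConnectionError("peer not reachable")


def cached_price():
    """Hypothetical fallback: return the last known value."""
    return 42


# The sleep function is replaced here so that the example runs instantly.
price = call_with_retry(fetch_price, cached_price, sleep=lambda seconds: None)
print(price)
```

The point is not the specific retry policy but that the behavior in case of a persistent failure is a conscious decision, here a cached value, instead of whatever happens to occur when an exception travels up the call stack.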

Understanding availability

Unfortunately, something like 100% availability does not exist in IT systems. While this should be clear if you think about it for a moment, most people still struggle to really embrace this idea. So, let us dive a bit deeper into availability and how distribution affects it.

According to Wikipedia, availability is:

[Availability is] the probability that an item will operate satisfactorily at a given point in time when used under stated conditions in an ideal support environment. – Wikipedia

Note that the definition talks about “operate satisfactorily” which does not only include response failures. It also includes all other failure types we talked about in the previous post:

- If a system does not respond, it is not available.

- If a system responds slower than specified, it is not available.

- If a system provides a wrong response, it is not available.

A system is only considered available if it responds according to its specification. And as I already discussed in a footnote of the previous post, not explicitly specifying the expected system behavior does not change anything for the better. Instead, the expectations will become user-driven and blurry.

And if your users claim that they think your system is too slow, you do not even have a basis for a sensible discussion. Instead, you discuss based on the gut feelings of your users, which leaves you in a very unfavorable position. Hence, I can only recommend specifying your expected system behavior explicitly.
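As a rough illustration of what an explicit specification buys you, the following sketch counts a request as served only if it got a response, the response arrived within a specified time, and the response was correct. The `Observation` type, its fields and the 500 ms limit are assumptions made up for this example. Note also that it measures availability per request, which is a simplification of the time-based definition.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Observation:
    """One observed request; all fields are illustrative assumptions."""
    latency_ms: Optional[float]  # None means no response at all
    correct: bool                # did the response match the expected result?


def is_available(obs: Observation, max_latency_ms: float) -> bool:
    """A request counts as served only if it meets the specification."""
    if obs.latency_ms is None:           # no response
        return False
    if obs.latency_ms > max_latency_ms:  # slower than specified
        return False
    return obs.correct                   # a wrong response counts as unavailable


def measured_availability(observations, max_latency_ms):
    """Fraction of requests that were served according to the specification."""
    ok = sum(is_available(o, max_latency_ms) for o in observations)
    return ok / len(observations)


observations = [
    Observation(latency_ms=120.0, correct=True),   # fine
    Observation(latency_ms=None, correct=False),   # no response
    Observation(latency_ms=900.0, correct=True),   # too slow
    Observation(latency_ms=80.0, correct=False),   # wrong response
]
print(measured_availability(observations, max_latency_ms=500.0))  # 0.25
```

Without the explicit `max_latency_ms`, the third request could not be classified at all, which is exactly the blurry situation described above.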

Measuring availability

Availability is typically measured in percent, e.g., “99% availability”. This number describes the amount of time the system can be expected to be available, i.e., operate according to its specification. More formally, this would be:

Availability = E[uptime] / (E[uptime] + E[downtime])

where E[…] denotes the expected value of something. Alternatively you can write:

Availability = MTTF / (MTTF + MTTR) = MTTF / MTBF

where

- MTTF means “Mean Time to Failure”, i.e., the mean time from the start of normal operations to the occurrence of a failure.

- MTTR means “Mean Time to Repair/Recovery”, i.e., the mean time between the occurrence of a failure and resuming normal operations.

- MTBF means “Mean Time between Failures” which is the sum of MTTF and MTTR, i.e., the mean time for a whole cycle from the occurrence of a failure over recovery and normal operations to the next occurrence of a failure.
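As a small worked example of this formula, the following sketch computes the availability from MTTF and MTTR. The function name and the example values (199 hours of normal operation, 1 hour of recovery) are assumptions chosen for illustration.

```python
def availability(mttf_hours: float, mttr_hours: float) -> float:
    """Availability = MTTF / (MTTF + MTTR) = MTTF / MTBF."""
    mtbf_hours = mttf_hours + mttr_hours
    return mttf_hours / mtbf_hours


# Assumed example: a failure every 199 hours on average, 1 hour to recover.
print(f"{availability(199, 1):.1%}")  # 99.5%
```

The formula also shows that there are two levers: making failures rarer (higher MTTF) and recovering faster (lower MTTR).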

The “nines”

Often, availability goals are described as “nines”, like “3 nines” or “4 nines”. To understand what this means and what different availability goals mean in practice, let us look at a few availability goals:

- 90% availability: 36.5 days downtime per year

- 99% availability: 87.6 hours (< 4 days) downtime per year

- 99.5% availability: 43.8 hours (< 2 days) downtime per year

- 99.9% availability (“3 nines”): 8.76 hours downtime per year

- 99.99% availability (“4 nines”): 52.56 minutes downtime per year

- 99.999% availability (“5 nines”): 5.26 minutes downtime per year

- 99.9999% availability (“6 nines”): 31.54 seconds downtime per year

- 99.99999% availability (“7 nines”): 3.15 seconds downtime per year

- 99.999999% availability (“8 nines”): 315 milliseconds downtime per year

- 99.9999999% availability (“9 nines”): 31.5 milliseconds downtime per year
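The figures in the list above follow from multiplying the non-available fraction by the length of a year. A minimal sketch of that arithmetic, assuming a 365-day year (the function name is made up for illustration):

```python
from datetime import timedelta

YEAR = timedelta(days=365)  # assumption: a 365-day year, as in the list above


def downtime_per_year(availability_percent: float) -> timedelta:
    """Expected downtime per year for a given availability in percent."""
    unavailable_fraction = 1 - availability_percent / 100
    return YEAR * unavailable_fraction


for percent in (90, 99, 99.5, 99.9, 99.99, 99.999):
    print(f"{percent}%: {downtime_per_year(percent)}")
```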

While there are ATM switches running on Erlang that reached “9 nines” of availability, this most definitely is not the norm. This level of availability was achieved on some dedicated high-availability hardware. We also talk about a single node, i.e., remote communication with its imponderabilities was not involved – which makes a big difference as we will see soon.

When pondering costs and effort of availability, we can state as a rule of thumb:

- Achieving 90% availability is a no-brainer.

- Achieving 99% availability already requires some careful consideration: 4 days of non-availability accumulate surprisingly fast if you need to take your application down for every release, patch and other maintenance. Also keep in mind that brittle or latent connections as well as wrong answers also count as “non-available”.

- Achieving 99.9% availability (“3 nines”) is not easy at all. You need careful planning and design of dedicated measures to improve availability like redundant nodes, automated health checks and failover, thorough monitoring and alerting, zero-downtime deployments and the like. Going for this level of availability will definitely increase the development, test and runtime costs of your application significantly.

- Every additional “nine” of availability will roughly increase your costs and efforts by an order of magnitude.

On-premises data center operations teams typically offer SLAs promising around 99.5% availability excluding planned downtime. This means a bit less than 2 days of unplanned downtime per year plus the downtime for planned maintenance like installing new releases and patches of the application and the underlying infrastructure components and OS, upgrading hardware, and the like. The overall availability typically is around 99%, i.e., up to 4 days of cumulative downtime per year. [1]

AWS offers an instance-level SLA of 99.5% (including planned downtime) for a single EC2 instance, which is in a similar range as the SLAs typically offered by on-premises data center operations teams. [2] Other cloud providers offer comparable SLAs.

Adding nodes

Now let us add more nodes to the picture. We assume a node availability of 99.5% including planned downtime, which is quite a good guarantee and not easy to achieve as we have seen before.

If we add more interacting nodes that all need to be available to answer a request, their availabilities multiply. As availability is a number < 1 (or < 100% using percent notation), the resulting availability will be lower than the lowest availability of any of the nodes involved.

- If 10 nodes (applications, services, other components like databases, directory servers, etc.) are involved in answering an external request, the overall availability of the 10 nodes needed to answer the request is 95.1%. This means that, on average, 1 out of 20 requests will fail.

- If 50 nodes (applications, services and other components like databases, directory servers, etc.) are involved in answering an external request, the overall availability of the 50 nodes needed to answer the request is 77.8%. This means that, on average, more than 1 out of 5 requests will fail.
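The two figures above can be reproduced with a few lines of code. The sketch assumes that all nodes are needed to answer a request and that they fail independently of each other; the function name is made up for illustration.

```python
def serial_availability(node_availability: float, nodes: int) -> float:
    """Availability of a request that needs all nodes, assuming independent failures."""
    return node_availability ** nodes


for nodes in (1, 10, 50):
    print(f"{nodes} nodes: {serial_availability(0.995, nodes):.1%}")
# 1 nodes: 99.5%
# 10 nodes: 95.1%
# 50 nodes: 77.8%
```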

Now look at your system designs. How many nodes need to be available for reading information online? Do you need to reach out, e.g., to a customer system/service, a contract system/service, a product system/service, and more, which in turn consist of several distributed parts like web server, application server, database server and more? And that does not even include the required infrastructure parts like an IAM server using an independent directory server, reverse proxies, and so on.

The same is true for completing write requests. How many nodes need to be available to accept writes of your updates online? Especially if the update needs to be propagated to several systems transactionally as we still see as a requirement surprisingly often, the number of nodes involved can quickly become surprisingly high.

I have seen a lot of designs where 10 nodes is sort of a lower bound and where the 50 nodes are hit surprisingly often.

Adding the network

Up to here, we only talked about the availability of single nodes and neglected the network between the nodes. So, let us add the imponderabilities of the network between the nodes to the picture.

In the 1990s, Peter Deutsch framed “The Eight Fallacies of Distributed Computing” (including one contribution by James Gosling):

- The network is reliable

- Latency is zero

- Bandwidth is infinite

- The network is secure

- Topology doesn’t change

- There is one administrator

- Transport cost is zero

- The network is homogeneous

All of the above are fallacies, i.e., the opposite is true. Just to add two examples of the implications of these fallacies, taken from the paper “Highly Available Transactions: Virtues and Limitations” by Peter Bailis et al.:

“A 2011 study of several Microsoft datacenters observed over 13,300 network failures with end-user impact, with an estimated median 59,000 packets lost per failure. The study found a mean of 40.8 network link failures per day (95th percentile: 136), with a median time to repair of around five minutes (and up to one week).”

Just let these numbers sink in. And even if it is a sport among many software developers to mock Microsoft: They know how to build excellent data centers! They really do! Much worse numbers are to be expected from any normal on-premises data center.

The second example is taken from the same paper:

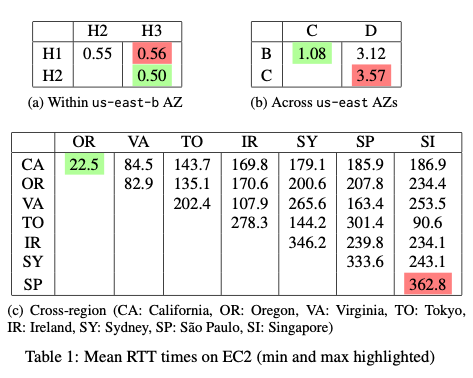

As you can see, inside a single availability zone, the mean RTT (round trip time) is below a millisecond (ms). Latency is not an issue, unless something else is broken. Inside a region, everything still is quite okay: Up to 4 ms RTT – still not a big deal. But as soon as you leave a region and add cross-region communication, latency goes up: Around 20 ms at best and up to almost 400 ms just due to physical constraints. The speed of light limits how fast information can flow across the globe.
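To get a feeling for the physical constraint, here is a back-of-the-envelope sketch of the lower bound for the round trip time over a given cable length. It assumes that light in an optical fiber travels at roughly two thirds of its speed in vacuum; the distances are hypothetical, and real RTTs are higher because of routing, switching and non-direct cable paths.

```python
SPEED_OF_LIGHT_KM_PER_S = 299_792.458  # in vacuum
FIBER_FACTOR = 2 / 3                   # assumption: light in fiber is roughly 1/3 slower


def min_rtt_ms(distance_km: float) -> float:
    """Lower bound for the round trip time over a fiber of the given length."""
    one_way_s = distance_km / (SPEED_OF_LIGHT_KM_PER_S * FIBER_FACTOR)
    return 2 * one_way_s * 1000


for distance_km in (100, 1_000, 10_000):  # hypothetical cable lengths
    print(f"{distance_km} km: at least {min_rtt_ms(distance_km):.1f} ms")
```

Under these assumptions, a cable length of 10,000 km already results in a round trip time of roughly 100 ms, no matter how good the hardware is.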

We additionally need to note that the numbers from AWS are still very impressive. They are quite close to the physical limits because they use dedicated lines, sometimes even their own cables to connect their regions. If you need to connect your data centers via non-dedicated lines, lower bounds of 20-50 ms are quite normal, even if you just connect two sites a few kilometers apart.

And we only talked about mean latency. If we talk about the 95th or 99th percentile, the numbers will be a lot higher – typically an order of magnitude or more.

All this adds to the (non-)availability of your system landscapes, both the regular network outages and the latency you will experience all the time.

This means, our overall system availability will be clearly below the availability we get if we only take the nodes into account. The network adds its share of non-availability to the figures.

Back to the 100% availability trap

What does this all mean for the 100% availability trap, i.e., the assumption that all remote peers, including infrastructure tools like databases, message brokers and the like, are available 100% of the time, that they are never down?

It means that if you write your code with the 100% availability trap in your mind, most likely the availability of your resulting system will be – well – improvable.

And if you remember the continually growing number of peers from the first post of this series, developing software with such an attitude is a guarantee for ending up with availability problems at runtime.

There are few situations where “works on my machine” tells you less than in a distributed system environment.

In the end, we can state:

The question is not if failures will hit you in a distributed system landscape.

The only question left is when and how badly they will hit you.

Hence, we better get rid of the still widespread 100% availability trap and brace ourselves for failures that will hit us at runtime – systems not being available when we expect them to be, network connections being temporarily down or latent, and so on.

Google once experienced that their developers fell into the 100% availability trap regarding their distributed lock service Chubby: Most developers wrote their software expecting Chubby to be 100% available. The result was massive outages whenever Chubby did not work perfectly.

The interesting thing is how Google responded to that situation. Most companies would have tried to improve the availability of Chubby as it obviously was the “root cause” of the outages, feeding the 100% availability trap in the minds of their software developers.

Google chose a different path. They started to shut down Chubby regularly. This way, all developers using Chubby could not rely on Chubby being available all the time and needed to handle the situation that Chubby is down in their code. As a result, the Google developers did not fall for the 100% availability trap anymore (at least with respect to Chubby).

Summing up

In this post, we have seen that the failure modes discussed in the previous post are not just a theoretical concept but that they actually affect us at the application level.

We discussed the 100% availability trap, i.e., the assumption that all remote peers, including infrastructure tools like databases, message brokers and the like, are available 100% of the time, a trap that many developers (and other people) still fall into.

We discussed availability in more detail and have seen how multiple peers and the network reduce the overall system availability. This means that with the 100% availability trap in your head you will build systems that exhibit poor availability at runtime.

Or to phrase it a bit differently:

Failures will hit you in today’s distributed, highly interconnected system landscapes.

They are not an exception. They are the normal case and you cannot predict when they will occur.

Hence, we need to embrace failures and prepare for them to create systems that offer a higher availability than the underlying parts involved.
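That the whole can be more available than its parts is easiest to see with redundant nodes: if a request can be served by any one of several replicas, only the case that all of them are down at the same time counts as non-available. The following sketch shows the arithmetic. It assumes independent failures and a perfect failover, both of which are optimistic, and the function name is made up for illustration.

```python
def redundant_availability(node_availability: float, replicas: int) -> float:
    """Availability if at least one of the replicas has to be up.

    Assumes independent failures and a perfect failover, which is optimistic.
    """
    all_down = (1 - node_availability) ** replicas
    return 1 - all_down


for replicas in (1, 2, 3):
    print(f"{replicas} replica(s): {redundant_availability(0.995, replicas):.6%}")
```

Under these assumptions, two replicas with 99.5% availability each would already yield about 99.9975%. In practice, failures are often correlated and the failover itself can fail, so the real numbers will be lower.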

In the next post, we will discuss in more detail why these failures will hit us at the application level for sure and we cannot leave their handling to the operations teams as we did in the past. Stay tuned … ;)

1. I know about some mainframe people who turn up their noses at such numbers as they guarantee 99.7% availability including planned downtime. Still, if you dig deeper, they will admit they usually only guarantee these numbers while their transaction monitors are up. Taking into account that the transaction monitors usually are down all night and at the weekends, the overall numbers are not that impressive anymore. Nevertheless, mainframes are impressive pieces of hardware and OS/infrastructure level software and they give you quite good availability out of the box. But then again, this availability comes at a correspondingly high price. Thus, going for the same guarantees on microprocessor-based hardware would not be any more expensive in terms of money and effort.

2. Note that the 99.99% availability promise for the EC2 service refers to EC2 as a service, not to a single EC2 instance.
